The simplest form of Retrieval-Augmented Generation (embed documents, retrieve chunks, pass them to an LLM) remains a useful prototyping pattern, but it rarely survives the transition to production constraints. By mid-2024, production RAG systems had evolved into multi-stage retrieval pipelines combining hybrid search, cross-encoder reranking, and query transformation. The challenge isn't whether to use RAG, but how to architect systems that hold up at enterprise scale.

This guide addresses the architectural decisions that separate proof-of-concept demos from production knowledge systems. We examine vector database selection, retrieval strategies that improve recall by 40-70% over naive approaches, and the critical question of when simpler solutions outperform specialized infrastructure.

The Vector Database Decision Matrix

The vector database market has stratified into clear tiers. The choice depends less on feature checklists and more on understanding three thresholds: dataset size, acceptable latency, and your team's existing infrastructure.

When pgvector is Enough

PostgreSQL with the pgvector extension handles more production workloads than vendors acknowledge. For datasets under 5 million vectors where query latency of 100-200ms is acceptable, pgvector offers compelling advantages beyond cost savings.

The primary strength isn't vector search performance—it's hybrid query capability. Because pgvector operates within PostgreSQL, you execute SQL filters alongside vector similarity in a single atomic query. This eliminates the multi-step orchestration required when using dedicated vector databases: query the vector store for IDs, then join against PostgreSQL for metadata filtering. That architectural simplification alone justifies pgvector for many enterprise use cases.
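A minimal sketch of such a single-query filter-plus-similarity search, written as a Python helper that builds parameterized SQL. The `documents` table and its `department`, `title`, and `embedding` columns are hypothetical; `<=>` is pgvector's cosine-distance operator, and the `%s` placeholders assume a psycopg-style driver.

```python
from typing import Sequence, Tuple


def build_filtered_search(
    query_vec: Sequence[float], department: str, limit: int = 5
) -> Tuple[str, tuple]:
    """Return (sql, params) for one query mixing a SQL filter with vector similarity."""
    # pgvector accepts a text literal such as '[0.1,0.2,0.3]' cast to vector.
    vec_literal = "[" + ",".join(str(float(x)) for x in query_vec) + "]"
    sql = (
        "SELECT id, title "
        "FROM documents "                       # hypothetical table name
        "WHERE department = %s "                # ordinary metadata filter
        "ORDER BY embedding <=> %s::vector "    # cosine distance, nearest first
        "LIMIT %s"
    )
    return sql, (department, vec_literal, limit)


sql, params = build_filtered_search([0.1, 0.2], "legal", limit=3)
# With a live connection this would be run as: cursor.execute(sql, params)
```

The filter and the similarity ordering travel in one statement, so there is no second round trip to join IDs against metadata.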

Performance boundaries: pgvector degrades non-linearly beyond 10 million vectors. For datasets exceeding that scale or requiring consistent sub-50ms latency, purpose-built databases become necessary. But the threshold is higher than many assume—recent benchmarks show well-tuned pgvector deployments outperforming entry-tier managed services at a fraction of the cost.

When to use: Existing PostgreSQL infrastructure, heavy metadata filtering requirements, datasets under 5M vectors, and teams that value operational simplicity over raw millisecond performance.

Purpose-Built Vector Databases

Three distinct use cases justify moving beyond pgvector:

Qdrant (1M-100M vectors): Rust-based performance delivers sub-10ms p95 latency with advanced filtering. The resource efficiency matters—ingestion throughput of 50-100K vectors/sec means faster index rebuilds during schema changes. Best for real-time applications where latency is non-negotiable.

Weaviate (1M-100M vectors): Multi-modal vector search with native hybrid retrieval. The GraphQL API enables sophisticated queries combining vector similarity with relationship traversal. Particularly strong for knowledge graph integration (GraphRAG) where understanding connections between entities matters as much as semantic similarity.

Milvus (100M+ vectors): Distributed architecture with separation of compute and storage. Handles billions of vectors with 200K+ vectors/sec ingestion. The 11+ index types (HNSW, IVF, DiskANN) allow optimization for specific workload characteristics. Necessary for massive enterprise deployments or multi-tenant SaaS requiring horizontal scaling.

The Decision Framework

The market has created a "performance floor"—free, high-performing options like pgvector force specialized databases to justify value through extreme scale, managed convenience, or capabilities beyond pure vector search.
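As a rough illustration, the thresholds quoted in the sections above can be collapsed into a small helper. This is a deliberately coarse sketch: the function name and flags are made up for this example, the cut-offs are the ones stated in this guide, and a real decision would also weigh team skills, managed-service cost, and filtering needs.

```python
def suggest_vector_store(
    n_vectors: int,
    needs_sub_50ms: bool = False,
    needs_graph_queries: bool = False,
) -> str:
    """Map dataset size and latency needs to a starting-point recommendation."""
    if n_vectors >= 100_000_000:
        return "Milvus"      # distributed scale, 100M+ vectors
    if n_vectors < 5_000_000 and not needs_sub_50ms:
        return "pgvector"    # small enough, 100-200ms latency acceptable
    if needs_graph_queries:
        return "Weaviate"    # hybrid retrieval plus relationship traversal
    return "Qdrant"          # low-latency filtering in the 1M-100M range


print(suggest_vector_store(2_000_000))
print(suggest_vector_store(20_000_000, needs_sub_50ms=True))
```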

RAG Maturity: From Naive to Agentic

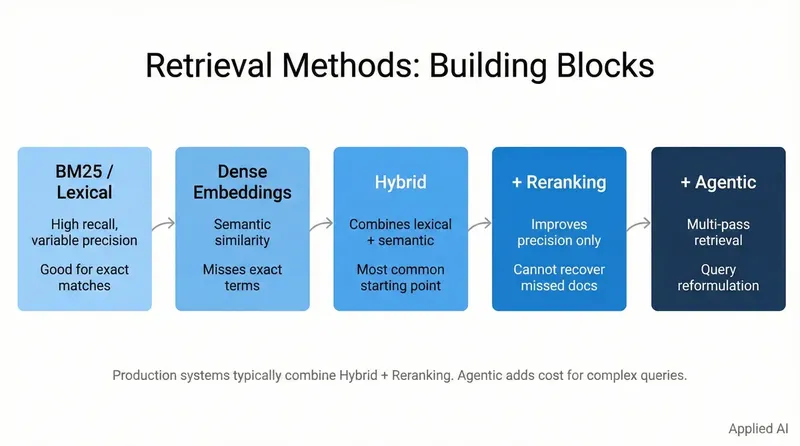

Understanding RAG's evolution clarifies which patterns apply to specific use cases. The progression isn't chronological—it's a maturity spectrum where different applications require different sophistication levels.

Naive RAG: The Prototyping Baseline

The original pattern: chunk documents, generate embeddings, retrieve top-k similar chunks via cosine similarity, pass to LLM. This approach remains useful for quick prototypes but typically fractures under four production stress points:

- Low precision: Semantic similarity doesn't guarantee relevance. Documents discussing "Java the programming language" and "Java the island" may have similar embeddings in certain contexts.

- Recall gaps: Critical information containing exact product codes or technical terms gets missed when embeddings fail to capture lexical precision.

- No answer verification: The system has no mechanism to determine if retrieved context actually supports the generated answer.

- Poor handling of multi-hop questions: Questions requiring synthesis across multiple documents ("How did the proposed regulation impact Company X's Q3 strategy?") fail when retrieval focuses on single chunks.

Success rates of 10-40% for naive RAG in enterprise environments drove rapid evolution to advanced patterns.

Advanced RAG: The Production Standard

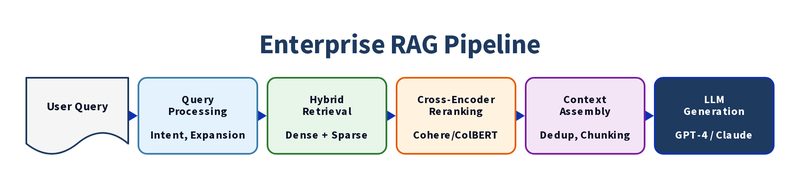

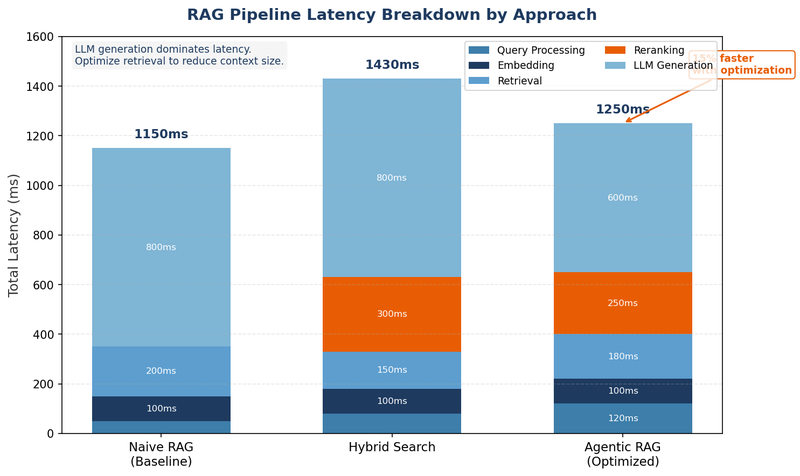

State-of-the-art systems in 2025 employ multi-stage pipelines addressing naive RAG's failure modes:

Hybrid Search (Vector + Keyword): Combining dense semantic retrieval with sparse keyword methods (BM25 or SPLADE) addresses both semantic understanding and lexical precision. Reciprocal Rank Fusion (RRF) merges the two ranked lists by computing a combined score from inverse ranks—documents ranking high on both lists get boosted, while documents strong on only one method still surface. RRF typically shows 15-30% better retrieval accuracy than pure vector search (Pinecone Research, 2024). This is now baseline for production systems.
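The fusion step can be sketched in a few lines. This assumes each retriever returns an ordered list of document IDs, and it uses the smoothing constant k = 60 that is common in RRF implementations; the constant is an assumption here, not something the sources above prescribe.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence


def reciprocal_rank_fusion(rankings: Iterable[Sequence[str]], k: int = 60) -> List[str]:
    """Merge ranked lists: each list contributes 1 / (k + rank) per document."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))


dense_hits = ["a", "b", "c"]    # e.g. from vector search
sparse_hits = ["b", "d", "a"]   # e.g. from BM25
print(reciprocal_rank_fusion([dense_hits, sparse_hits]))
# -> ['b', 'a', 'd', 'c']
```

Documents "a" and "b" appear in both lists and rise to the top, while "c" and "d" still surface from a single list.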

Late Interaction (ColBERT): A middle ground between fast dense retrieval and slow cross-encoder reranking. ColBERT precomputes per-token embeddings for documents at index time and defers the query-document interaction to query time: each query token is matched against its most similar document token (MaxSim), and those per-token maxima are summed into the relevance score. This yields near cross-encoder precision at a fraction of the latency; the original ColBERT paper reports running about two orders of magnitude faster than BERT-based cross-encoder rankers on MS MARCO, and ColBERTv2 later improved quality and index size. Consider ColBERT when you need precision above hybrid search but can't afford full reranking latency.
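The late-interaction score itself is simple to write down. The sketch below uses plain Python lists as stand-ins for token embeddings and implements only the scoring rule (sum over query tokens of the maximum cosine similarity to any document token); it is not the ColBERT library's API and omits the indexing and compression that make the approach fast in practice.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _normalize(v: Vector) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def maxsim_score(query_tokens: Sequence[Vector], doc_tokens: Sequence[Vector]) -> float:
    """Sum, over query tokens, of the best cosine match among document tokens."""
    q = [_normalize(v) for v in query_tokens]
    d = [_normalize(v) for v in doc_tokens]   # in practice precomputed at index time
    return sum(
        max(sum(a * b for a, b in zip(qv, dv)) for dv in d)
        for qv in q
    )


query = [[1.0, 0.0], [0.0, 1.0]]
doc_covering_both = [[1.0, 0.0], [0.0, 1.0]]
doc_covering_one = [[1.0, 0.0], [1.0, 0.0]]
print(maxsim_score(query, doc_covering_both))  # 2.0
print(maxsim_score(query, doc_covering_one))   # 1.0
```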

Two-Stage Retrieval with Reranking: Initial hybrid search retrieves 50-100 candidates prioritizing recall. A cross-encoder model then reranks this smaller set, jointly evaluating query-document pairs for precise relevance scoring. Cohere Rerank 3.5 demonstrates 23.4% improvement over hybrid search alone on the BEIR benchmark (Cohere, 2024). The computational trade-off (slower cross-encoder inference) is justified by dramatically improved precision—typically reducing irrelevant passages from 30-40% to under 10%.
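The control flow of the two stages can be sketched with the retriever and the cross-encoder passed in as plain functions. Both callables are placeholders: `first_stage` stands for whatever hybrid search you run, and `score_pair` for a cross-encoder or a hosted rerank endpoint; the toy versions below exist only to make the example runnable.

```python
from typing import Callable, List


def retrieve_then_rerank(
    query: str,
    first_stage: Callable[[str, int], List[str]],
    score_pair: Callable[[str, str], float],
    n_candidates: int = 50,
    top_n: int = 5,
) -> List[str]:
    """Stage 1 favors recall; stage 2 rescores only that candidate set."""
    candidates = first_stage(query, n_candidates)
    scored = [(score_pair(query, doc), doc) for doc in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_n]]


# Toy stand-ins: a fixed corpus and word overlap as the "relevance model".
corpus = ["reset your password", "billing cycle dates", "password policy for admins"]
toy_first_stage = lambda q, n: corpus[:n]
toy_score = lambda q, d: float(len(set(q.split()) & set(d.split())))

top = retrieve_then_rerank("admins password policy", toy_first_stage, toy_score, top_n=2)
print(top)
```

Because the expensive scorer sees only `n_candidates` documents, its cost stays fixed per query regardless of corpus size.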

Query Transformation: HyDE (Hypothetical Document Embeddings) generates hypothetical answers using an LLM, then embeds those answers as search queries. The technique shows 20-35% improvement on ambiguous queries, particularly effective for specialized domains (Gao et al., 2023). Multi-query RAG generates 3-5 reformulated versions, performs parallel retrieval, and merges results—increasing recall for complex questions at cost of 200-500ms additional latency.
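A minimal sketch of the HyDE flow, with the LLM, the embedding model, and the vector search injected as callables. All three parameters and the prompt wording are assumptions made for illustration; the point is only that the hypothetical answer, not the raw question, is what gets embedded.

```python
from typing import Callable, List, Sequence


def hyde_search(
    query: str,
    generate: Callable[[str], str],                        # LLM call (placeholder)
    embed: Callable[[str], Sequence[float]],               # embedding model (placeholder)
    search: Callable[[Sequence[float], int], List[str]],   # vector store lookup (placeholder)
    k: int = 5,
) -> List[str]:
    """Embed an LLM-written hypothetical answer and search with that vector."""
    hypothetical = generate(f"Write a short passage that answers: {query}")
    return search(embed(hypothetical), k)
```

The extra `generate` call is where the added latency comes from, so it is usually worth gating HyDE behind a check for ambiguous or low-scoring queries.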

Parent Document Retrieval: Embed small chunks (around 400 tokens) for precise matching, but pass the larger parent documents (2000+ tokens) to the LLM for generation. This preserves surrounding context. Because several top-ranked chunks often come from the same parent, deduplicate hits by parent so that one document does not crowd out other sources.
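The chunk-to-parent step might look like the sketch below. The two dictionaries are hypothetical in-memory stand-ins for whatever store holds the chunk-to-parent mapping and the parent text.

```python
from typing import Dict, List, Sequence


def parents_for_hits(
    chunk_hits: Sequence[str],
    chunk_to_parent: Dict[str, str],
    parents: Dict[str, str],
    max_parents: int = 3,
) -> List[str]:
    """Swap ranked chunk hits for their parent documents, keeping each parent once."""
    seen = set()
    selected: List[str] = []
    for chunk_id in chunk_hits:            # hits arrive best-first
        parent_id = chunk_to_parent[chunk_id]
        if parent_id in seen:
            continue                       # this parent is already in the context
        seen.add(parent_id)
        selected.append(parents[parent_id])
        if len(selected) == max_parents:
            break
    return selected


mapping = {"c1": "A", "c2": "A", "c3": "B"}
docs = {"A": "full text of document A", "B": "full text of document B"}
print(parents_for_hits(["c1", "c2", "c3"], mapping, docs))
```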

GraphRAG: When Relationships Matter

Pure vector search struggles with questions requiring relationship understanding. "Which papers by authors at Stanford cited work from DeepMind researchers?" needs both semantic relevance and graph traversal.

GraphRAG models data as entities (people, companies, papers) and relationships, combining semantic search over text with graph queries. Multi-hop questions that defeat pure vector search become tractable. Some implementations report near-deterministic accuracy (99% precision) for relationship-driven queries by grounding retrieval in structured knowledge graphs.

When GraphRAG justifies complexity: Research paper databases with citation networks, organizational knowledge with complex reporting structures, legal document systems with precedent relationships, or any domain where "what is related to what" is as important as "what is similar."

Agentic RAG: Orchestrated Retrieval

Agentic RAG treats retrieval as one tool among many in an autonomous planning workflow. An AI agent analyzes complex queries, creates multi-step plans, selects appropriate retrieval strategies per step, executes the plan, and synthesizes findings.

Concrete example (Financial Research):

- Query: Agent receives "Compare Apple's supply chain risk exposure vs. Microsoft over the last 2 quarters"

- Planning: Agent determines this requires (a) 10-K filings from SEC, (b) supply chain relationship graph, (c) recent news sentiment

- Retrieve: GraphRAG for supplier relationships, hybrid search for risk disclosures in filings, news API for sentiment

- Verify: Agent checks retrieved context covers both companies and relevant time period

- Synthesize: Combine sources into structured comparison

This represents the current sophistication frontier but introduces operational complexity. The shift from static pipeline to dynamic orchestration requires careful design: agents need clear tool boundaries, reliable verification mechanisms, and strategies to handle failure modes (e.g., what happens when the news API times out?).

When agentic complexity pays off: Financial analysis requiring real-time market data integration, complex legal research spanning multiple precedent types, or situations where retrieval strategy depends on query characteristics understood only at runtime.

Domain-Driven RAG: Application-Specific Architectures

RAG implementations must adapt to domain-specific challenges. The maturity progression isn't universal—different applications require different patterns.

Enterprise Knowledge Management

Challenge: Information silos across wikis, document management systems, SharePoint, databases, and collaboration tools. The goal is unified access to collective organizational knowledge without rebuilding all systems.

Key patterns:

- Intelligent routing: A classifier determines query domain (finance, HR, engineering, legal) and routes to specialized RAG applications tuned for specific knowledge bases. This improves precision by 30-40% over monolithic approaches searching all content.

- Metadata-heavy filtering: Enterprise data includes author, creation date, document type, access controls. Advanced systems leverage metadata extensively—both for retrieval relevance and security enforcement at the retrieval stage.

- Hybrid data source integration: Answering "What was our top-selling product in EMEA last quarter?" requires querying structured databases and internal APIs, not just document repositories. Architecture must support retrieving from relational databases, NoSQL stores, and APIs alongside text documents.

Customer Support Automation

Challenge: Fast, accurate, personalized answers 24/7 while reducing human support load. Reliability and trustworthiness are non-negotiable.

Key patterns:

- Curated knowledge bases: Performance depends almost entirely on underlying knowledge quality. Meticulously curated FAQs, product manuals, policies, and troubleshooting guides form the foundation. "Garbage in, garbage out" applies ruthlessly.

- Human-in-the-loop feedback: Production systems incorporate user rating mechanisms and escalation review by human agents. This feedback continuously refines the knowledge base, creating improvement cycles.

- Conversational memory: Storing conversation context enables understanding follow-up questions without repetition, personalizing interactions across multiple turns.

- Source citation: Showing users the specific document or policy supporting an answer builds trust and provides verification paths.

High-Stakes Complex Reasoning

Challenge: Legal research, medical diagnostics, financial analysis require synthesizing evidence from numerous sources with rigorous logical inference. Factual accuracy and auditable reasoning are mandatory.

Key patterns:

- Reasoning-augmented frameworks: Simple chain-of-thought prompting proves insufficient. Systems like CRP-RAG use reasoning graphs to model complex logical structures, exploring multiple reasoning paths and backtracking from dead ends based on retrieved evidence.

- Multi-agent systems: Complex financial analysis might deploy specialized agents: one retrieving real-time market data, another applying statistical inference, a third synthesizing findings into investment recommendations. This modular approach provides clear, auditable reasoning trails.

- Plan-then-Act-and-Review (PAR-RAG): Critical for multi-hop questions where early errors propagate. The system generates a global plan, executes each step (retrieve + generate intermediate result), and reviews output for errors before proceeding. This verification mechanism prevents error cascades.

The concept of a monolithic "retriever" becomes obsolete in advanced systems. Intelligent orchestration analyzes queries and routes to appropriate tools: GraphRAG for relationships, hybrid search for technical terms, API calls for real-time data.

Common Failure Modes and Debugging

Understanding where RAG systems fail helps design robust architectures. The three dimensions of RAG evaluation—retrieval quality, generation accuracy, and faithfulness to sources—each have distinct failure modes.

Chunking Strategy Failures

Symptom: Relevant information retrieved but context incomplete or incoherent.

Causes:

- Size-based chunking (every 500 tokens) severs contextual links within tables, lists, or logical sections

- No overlap between chunks means cross-chunk concepts get fragmented

- Headers and metadata separated from content they describe

Solutions: Structure-aware chunking preserving document elements (tables intact, lists complete, headers with relevant content). Overlapping chunks (10-20% overlap) ensure cross-boundary concepts get captured. Document-specific strategies: academic papers chunk by section, code documentation by function/class.
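The overlap part of that advice can be sketched as a sliding window over a token list. This covers only the overlap mechanics, with a 10% default matching the range above; structure-aware splitting (keeping tables, lists, and headers together) would happen before this step and is not shown.

```python
from typing import List, Sequence


def chunk_with_overlap(tokens: Sequence, size: int = 500, overlap: int = 50) -> List[list]:
    """Split tokens into windows of `size` that share `overlap` tokens with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    step = size - overlap
    chunks: List[list] = []
    for start in range(0, len(tokens), step):
        chunks.append(list(tokens[start:start + size]))
        if start + size >= len(tokens):
            break                          # the last window already reached the end
    return chunks


print(chunk_with_overlap(list(range(10)), size=4, overlap=1))
# -> [[0, 1, 2, 3], [3, 4, 5, 6], [6, 7, 8, 9]]
```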

Retrieval vs. Generation Errors

Symptom: Wrong answers despite correct information in knowledge base.

Diagnosis: Distinguish whether retrieval failed (relevant documents not retrieved) or generation failed (relevant documents retrieved but LLM generates wrong answer).

Retrieval failures:

- Monitor relevance scores. If top-k results all have low similarity scores (< 0.6), query transformation (HyDE) or query expansion may help

- Check for exact term misses: if query contains specific product codes or technical jargon, hybrid search (keyword + vector) becomes critical

- Parent document retrieval: verify chunk size isn't too small—larger chunks provide better context even at slight recall cost

Generation failures:

- Verify retrieved context actually supports the question. An LLM evaluation prompt: "Does the provided context contain information to answer the question? Yes/No" identifies when retrieval succeeded but context is insufficient

- Check prompt engineering: explicit instructions ("Base your answer only on the provided context. If information is not present, state that clearly") reduce hallucination

- Consider reranking: if relevant documents are retrieved but ranked low (positions 8-15), reranking pushes them higher before LLM sees them
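The triage above can be automated over a labeled test set. The sketch assumes each test case records which document IDs are truly relevant and whether the final answer was judged correct; the function name and the `context_cutoff` parameter (how many top results actually reach the LLM) are illustrative.

```python
from typing import Sequence


def diagnose(
    retrieved_ids: Sequence[str],
    relevant_ids: Sequence[str],
    answer_correct: bool,
    context_cutoff: int = 5,
) -> str:
    """Attribute a wrong answer to retrieval, ranking, or generation."""
    if answer_correct:
        return "ok"
    relevant = set(relevant_ids)
    ranks = [i for i, doc_id in enumerate(retrieved_ids, start=1) if doc_id in relevant]
    if not ranks:
        return "retrieval_failure"     # nothing relevant came back at all
    if min(ranks) > context_cutoff:
        return "ranking_failure"       # relevant doc retrieved but ranked too low to be used
    return "generation_failure"        # the LLM saw relevant context and still erred


print(diagnose(["a", "b"], ["z"], answer_correct=False))
print(diagnose(["z", "a"], ["z"], answer_correct=False))
```

Counting these labels across the test set shows whether effort should go into hybrid search and query transformation, into reranking, or into prompting.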

Embedding Model Mismatch

Symptom: Poor retrieval performance despite quality content and good chunking.

Causes: Embedding model trained on general web text performs poorly on specialized domains (legal, medical, financial). Domain-specific terminology gets embedded poorly.

Solutions:

- Domain-specific embedding models (e.g., specialized medical embeddings) when available

- Fine-tuning base embedding models on domain corpus (requires labeled query-document pairs)

- Hybrid search reduces dependency on embedding quality—keyword matching catches domain terms even when embeddings fail

Scale-Related Degradation

Symptom: System worked well with 10K documents, fails with 100K documents.

Causes:

- Vector database not properly indexed (brute-force search becomes prohibitively slow)

- Retrieval returns too many irrelevant results as corpus grows (larger haystack, same retrieval strategy)

- Reranking cost scales linearly with corpus size if applied naively (scoring every document rather than a fixed candidate set)

Solutions:

- Verify proper ANN indexing (HNSW, IVF) with appropriate parameters for scale

- Increase retrieval candidates (top-k from 20 to 50) then apply more aggressive reranking

- Metadata filtering before vector search dramatically reduces search space

- Consider hierarchical retrieval: classify query to domain, search only relevant subset

When NOT to Use RAG

RAG adds complexity and cost. Several scenarios favor simpler alternatives:

Long context windows (small corpus, low volume): Modern LLMs with 100K+ token contexts (Claude, GPT-4 Turbo) can ingest entire knowledge bases directly for small document sets. If total corpus fits in context window and query volume is low, RAG's retrieval machinery may be unnecessary overhead. But beware the cost trap: filling 128K tokens at $0.01/1K tokens costs $1.28 per query—viable for 100 queries/day, prohibitive at 10K queries/day. RAG retrieves 2K relevant tokens at $0.02 per query. At scale, RAG wins even when context windows could theoretically fit everything.
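The arithmetic behind that comparison, as a short script. The $0.01 per 1K input tokens rate and the 128K and 2K token counts are the figures from the paragraph above; output-token and retrieval infrastructure costs are ignored.

```python
def input_cost_per_query(tokens: int, usd_per_1k_tokens: float) -> float:
    """Input-token cost of a single query in USD."""
    return tokens / 1000 * usd_per_1k_tokens


RATE = 0.01                                              # USD per 1K input tokens
long_context = input_cost_per_query(128_000, RATE)       # about $1.28
rag = input_cost_per_query(2_000, RATE)                  # about $0.02

for queries_per_day in (100, 10_000):
    print(
        f"{queries_per_day:>6} queries/day: "
        f"long context ${long_context * queries_per_day:,.2f}, "
        f"RAG ${rag * queries_per_day:,.2f}"
    )
print(f"ratio: {long_context / rag:.0f}x")
```

The ratio depends only on the token counts (128K / 2K = 64), so it holds at any per-token price.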

Static, rarely-updated content: If knowledge base changes monthly or quarterly and is relatively small (< 50 documents), fine-tuning a base model on that content may outperform RAG. The model internalizes knowledge, eliminating retrieval latency and complexity. Trade-off: updating requires retraining rather than re-indexing.

Exact match requirements: If all queries are exact lookups ("What is the price of SKU-12345?"), traditional search (Elasticsearch) or direct database queries outperform RAG. Vector similarity adds no value for exact matching.

Real-time dynamic data: If answers require live data (current stock prices, system status), API calls or database queries are direct solutions. RAG is for static knowledge retrieval, not real-time data access (though agentic RAG can combine both).

Highly structured query patterns: If 90% of queries follow predictable patterns ("Show me all invoices from Q3 for Customer X"), parameterized SQL or structured search outperforms semantic retrieval. RAG excels at natural language understanding, not structured data queries.

Implementation Strategy: Phased Approach

Successful enterprise RAG implementations follow progressive sophistication:

Phase 1: Foundation (Weeks 1-2)

- Select vector database based on decision matrix (likely pgvector for most initial deployments)

- Implement basic document parsing for primary content types (PDFs, HTML)

- Deploy hybrid search (vector + BM25) as baseline retrieval

- Use managed LLM API (GPT-4, Claude) to establish quality ceiling

- Build simple web interface for testing queries

Success criteria: Answer accuracy > 60% on curated test set, query latency < 2 seconds, successful ingestion of initial document corpus.

Phase 2: Enhancement (Weeks 3-6)

- Add cross-encoder reranking (Cohere Rerank or open-source BGE-Reranker)

- Implement query transformation (HyDE) for complex queries

- Develop content-specific parsing (structure-aware chunking for tables, academic papers)

- Deploy metadata filtering and domain routing if multi-domain knowledge base

- Establish evaluation framework using tools like RAGAS or TruLens for automated metrics (faithfulness, answer relevance, context precision)

Success criteria: Answer accuracy > 75%, retrieval precision > 80%, documented failure modes with mitigation strategies.

Phase 3: Production Hardening (Weeks 7-12)

- Scale testing: validate performance with 10x document volume

- Implement caching strategies (semantic caching for repeated queries)

- Develop monitoring and alerting for retrieval quality degradation

- Deploy human-in-the-loop feedback mechanisms

- Document operational runbooks for common issues

- Security and compliance measures if required

Success criteria: Production SLA met (uptime, latency), documented operational procedures, user feedback loop operational.

Phase 4: Advanced Capabilities (Months 4+)

- GraphRAG for relationship-heavy queries (if applicable)

- Agentic orchestration for complex multi-step queries

- Fine-tuned embedding models for domain optimization

- Multi-agent systems for specialized reasoning (if complexity justified)

Success criteria: Demonstrable ROI metrics, handling of complex queries that simpler systems fail, clear documentation of when advanced features provide value vs. complexity.

Cost Architecture and Unit Economics

RAG economics come down to cost per query and how that compares to alternatives.

Unit economics comparison (1M queries/month, assuming $0.01 per 1K input tokens):

The cost argument for RAG vs. long context windows is compelling: filling a 128K context window costs about $1.28 in input tokens alone, while retrieving 2K relevant tokens costs about $0.02. That is a 64x difference in input costs (the ratio of 128K to 2K tokens, whatever the per-token rate), or roughly $1.28M versus $20K per month at 1M queries.

Key cost drivers:

- LLM inference: Dominates costs at scale (60-80% of total)

- Vector database: $5K-25K/month managed, or infrastructure costs self-hosted

- Reranking: $0.001-0.002 per query (cross-encoder inference)

- Embedding generation: One-time ingestion cost, negligible for retrieval

Optimization strategies:

- Semantic caching: Cache responses for semantically similar queries (cosine > 0.95). 20-40% cost reduction for repetitive query patterns.

- Model tiering: Route simple queries to GPT-3.5 ($0.002/1K tokens) or open models; reserve GPT-4 ($0.03/1K) for complex reasoning

- Efficient reranking: Rerank top 10-20 candidates, not all retrieved documents

- Batch embedding: Process document updates in batches during off-peak hours
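Semantic caching from the list above can be sketched as follows. This is an in-memory illustration with a linear scan and the 0.95 cosine threshold mentioned above; the class and method names are invented for the example, and a production cache would use an ANN index plus expiry and invalidation.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class SemanticCache:
    """Return a stored response when a new query embedding is close enough to an old one."""

    def __init__(self, threshold: float = 0.95) -> None:
        self.threshold = threshold
        self._entries: List[Tuple[List[float], str]] = []

    def get(self, embedding: Sequence[float]) -> Optional[str]:
        best_score, best_response = -1.0, None
        for stored, response in self._entries:   # linear scan; fine for a sketch
            score = cosine(embedding, stored)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score > self.threshold else None

    def put(self, embedding: Sequence[float], response: str) -> None:
        self._entries.append((list(embedding), response))


cache = SemanticCache()
cache.put([1.0, 0.0], "cached answer")
print(cache.get([0.99, 0.05]))   # near-duplicate query: cache hit
print(cache.get([0.0, 1.0]))     # unrelated query: None, fall through to the LLM
```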

Conclusion: Architecture Over Technology

The vector database you choose matters less than understanding when pgvector suffices versus requiring purpose-built infrastructure. Hybrid search plus reranking is now baseline—naive RAG fails in production. Domain-specific architectures (enterprise knowledge management, customer support, high-stakes reasoning) require different patterns.

Success depends on:

- Matching sophistication to requirements: Don't deploy GraphRAG when hybrid search solves the problem

- Understanding failure modes: Distinguish retrieval failures from generation failures

- Phased implementation: Establish foundation before adding complexity

- Measuring what matters: Track answer accuracy, retrieval precision, and operational metrics—not just vector database benchmarks

The field evolved from "can we make this work?" (2023) to "which patterns fit our use case?" (2025). The maturity progression—naive → advanced → graph-augmented → agentic—isn't chronological. It's a decision framework. Most enterprise applications need advanced RAG (hybrid + reranking). Some justify GraphRAG complexity. Few require agentic orchestration. Knowing which tier matches your requirements separates effective implementations from over-engineered solutions.

References

Cohere (2024). "Rerank 3.5: State-of-the-art Retrieval for Enterprise Search." https://cohere.com/blog/rerank-3-5

DataCamp (2025). "The 7 Best Vector Databases in 2025." https://www.datacamp.com/blog/the-top-5-vector-databases

Gao, L. et al. (2023). "Precise Zero-Shot Dense Retrieval without Relevance Labels." ACL 2023. https://aclanthology.org/2023.acl-long.99/

Microsoft (2024). "Integrated Vector Database - Azure Cosmos DB." Microsoft Learn. https://learn.microsoft.com/en-us/azure/cosmos-db/vector-database

Neo4j (2024). "Advanced RAG Techniques for High-Performance LLM Applications." https://neo4j.com/blog/genai/advanced-rag-techniques/

Northflank (2024). "PostgreSQL Vector Search Guide: Everything You Need to Know About pgvector." https://northflank.com/blog/postgresql-vector-search-guide-with-pgvector

Pinecone Research (2024). "Hybrid Search: Combining Vector and Keyword Search." https://www.pinecone.io/learn/hybrid-search/

Squirro (2025). "RAG in 2025: Bridging Knowledge and Generative AI." https://squirro.com/squirro-blog/state-of-rag-genai

Further Reading

- LLM Application Lifecycle — Production patterns for LLMOps

- PDF Parsing — Document extraction for RAG pipelines

This guide synthesizes production experience from enterprise RAG deployments across knowledge management, customer support, and high-stakes research applications.