NeurIPS 2025 opened this week in San Diego, marking the field's transition from a decade of "bigger models" to something more interesting: smarter systems. With 21,575 submissions (up from 9,467 in 2020), 5,290 accepted papers, and the conference's first dual-location format (San Diego and Mexico City), this year's event crystallizes three fundamental shifts that will reshape enterprise AI strategy for the next several years.

| Year | Submissions | Accepted | Acceptance Rate | Major Theme |
|---|---|---|---|---|
| 2020 | 9,467 | 1,899 | 20.1% | Self-supervised learning, ViT emergence |
| 2021 | 9,122 | 2,334 | 25.6% | OpenReview migration, Datasets Track launch |
| 2022 | 10,411 | 2,671 | 25.7% | Diffusion models displace GANs |
| 2023 | 13,330 | 3,540 | 26.1% | Post-ChatGPT boom, LLM scaling laws |
| 2024 | 17,491 | 4,497 | 25.8% | Creative AI track, RLHF refinement |
| 2025 | 21,575 | 5,290 | 24.5% | System 2 reasoning, agentic workflows |

We've synthesized the research trends, Best Paper awards, and emerging technical themes into what practitioners actually need to understand.

The Three Shifts

Shift 1: From Training to Inference. The new scaling laws apply to test-time compute, not just pre-training. Models that "think" before they speak, such as OpenAI's o1 and DeepSeek's R1, represent a fundamental change in how intelligence is synthesized.

Shift 2: From Diversity to Hivemind. The NeurIPS Best Paper "Artificial Hivemind" reveals that, despite vendor marketing, frontier LLMs from competing labs are converging toward highly similar outputs. This has profound implications for enterprises assuming model diversity provides redundancy.

Shift 3: From Demos to Production. Research has moved from "look what agents can do" to "why do they fail?" The Multi-Agent System Failure Taxonomy (MAST) and Capital One's RAFFLES framework signal a maturing discipline finally confronting reliability engineering.

Shift 1: Inference Is the New Training

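For a decade, the AI industry operated on a simple thesis: more parameters, more data, and more training compute equal better performance. The Chinchilla scaling laws formalized this into an optimization problem: for a fixed training budget, grow parameters and training tokens together, at roughly 20 tokens per parameter. The playbook followed directly: train the largest compute-optimal model you can afford.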
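The compute-optimal allocation can be sketched with two common approximations: training compute C ≈ 6·N·D FLOPs for N parameters and D tokens, and the Chinchilla rule of thumb D ≈ 20·N. Both are approximations rather than the paper's fitted law, and `chinchilla_optimal` is a name of our choosing.

```python
import math


def chinchilla_optimal(compute_flops: float, tokens_per_param: float = 20.0) -> tuple[float, float]:
    """Return (parameters, tokens) for a training budget, assuming the approximations
    C ~= 6 * N * D and D ~= tokens_per_param * N."""
    # Substituting D = r * N into C = 6 * N * D gives C = 6 * r * N^2.
    n_params = math.sqrt(compute_flops / (6.0 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


if __name__ == "__main__":
    # Chinchilla itself: ~70B parameters trained on ~1.4T tokens.
    budget = 6 * 70e9 * 1.4e12  # ~5.9e23 FLOPs
    n, d = chinchilla_optimal(budget)
    print(f"params ~ {n / 1e9:.0f}B, tokens ~ {d / 1e12:.1f}T")
```

Plugging in Chinchilla's own budget recovers roughly 70B parameters and 1.4T tokens, a useful sanity check on the two approximations.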

2025 marks the end of that era's exclusivity.

The Paradigm

The new paradigm, embodied in papers like "Towards Thinking-Optimal Scaling of Test-Time Compute," proposes that intelligence isn't fixed in the weights—it's synthesized on demand. A model can "pause" during inference, generate thousands of hidden reasoning tokens, verify its own logic, backtrack, and search for better solutions.

The numbers are striking: in one inference-scaling study, a 7B parameter model using tree search at inference time outperformed a 34B model using standard decoding. Compute is partly fungible between training and inference, and for reasoning-heavy tasks an extra unit of inference compute can buy more accuracy than the same compute spent on a larger model.

How It Works

Three mechanisms power this shift:

Dense Sequential Reasoning (Chain of Thought): Models like o1 and DeepSeek R1 generate linear streams of "thought" tokens, sometimes 5,000 reasoning tokens to produce a 50-token answer. With o1, these tokens are hidden from users but billed; R1 exposes them. The meter runs on thoughts you never see.
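To put a number on the hidden-reasoning meter, here is a per-query cost sketch using the list prices quoted in this article ($2.50/$10.00 per 1M tokens for GPT-4o, $15.00/$60.00 for o1). The token counts are illustrative assumptions, and reasoning tokens are assumed to be billed at the output rate, as with o1.

```python
def query_cost(input_tokens: int, output_tokens: int, reasoning_tokens: int,
               input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one request; hidden reasoning tokens are billed as output (assumption)."""
    billed_output = output_tokens + reasoning_tokens
    return (input_tokens * input_price_per_m + billed_output * output_price_per_m) / 1_000_000


# Illustrative request: 500-token prompt, 50-token visible answer.
gpt4o = query_cost(500, 50, 0, 2.50, 10.00)
o1 = query_cost(500, 50, 5_000, 15.00, 60.00)  # plus 5,000 hidden reasoning tokens
print(f"GPT-4o: ${gpt4o:.5f}  o1: ${o1:.4f}  ratio: {o1 / gpt4o:.0f}x")
```

Under these assumptions the o1 request costs about $0.31 against $0.00175 for GPT-4o, roughly 177 times more, and about 98% of the o1 bill comes from reasoning tokens the user never sees.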

Search and Verification: Best-of-N sampling generates parallel completions; a verifier selects the best. More sophisticated approaches use Monte Carlo Tree Search, treating response generation as a search problem. DeepMind's AlphaProof solved International Mathematical Olympiad problems this way.
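A minimal best-of-N sketch. The generator and verifier are hypothetical stand-ins (a noisy guesser and an exact checker) for a sampled LLM and a learned or programmatic verifier. With a perfect verifier and independent samples that each succeed with probability p, the chance that at least one of N is correct is 1 - (1 - p)^N.

```python
import random
from typing import Callable


def best_of_n(generate: Callable[[], str], score: Callable[[str], float], n: int) -> str:
    """Draw n candidates independently and return the one the verifier scores highest."""
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)


def p_any_correct(p: float, n: int) -> float:
    """P(at least one of n independent samples is correct) = 1 - (1 - p)^n."""
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    rng = random.Random(0)

    def noisy_model() -> str:
        # Hypothetical model: right ("42") 20% of the time, otherwise a random guess.
        return "42" if rng.random() < 0.2 else str(rng.randint(0, 99))

    def exact_verifier(answer: str) -> float:
        # Hypothetical perfect checker; real verifiers are learned and imperfect.
        return 1.0 if answer == "42" else 0.0

    print(best_of_n(noisy_model, exact_verifier, n=16))
    for n in (1, 4, 16):
        print(n, round(p_any_correct(0.2, n), 3))
```

With p = 0.2, going from 1 to 16 samples raises the chance that a correct answer is among the candidates from 20% to about 97%; an imperfect verifier will fall short of that ceiling.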

Adaptive Compute: Not every query needs deep thought. The "Thinking-Optimal" strategy dynamically allocates inference budget based on difficulty—zero thinking for pleasantries, deep reasoning for algorithmic challenges. This is critical for cost management.

The Economics

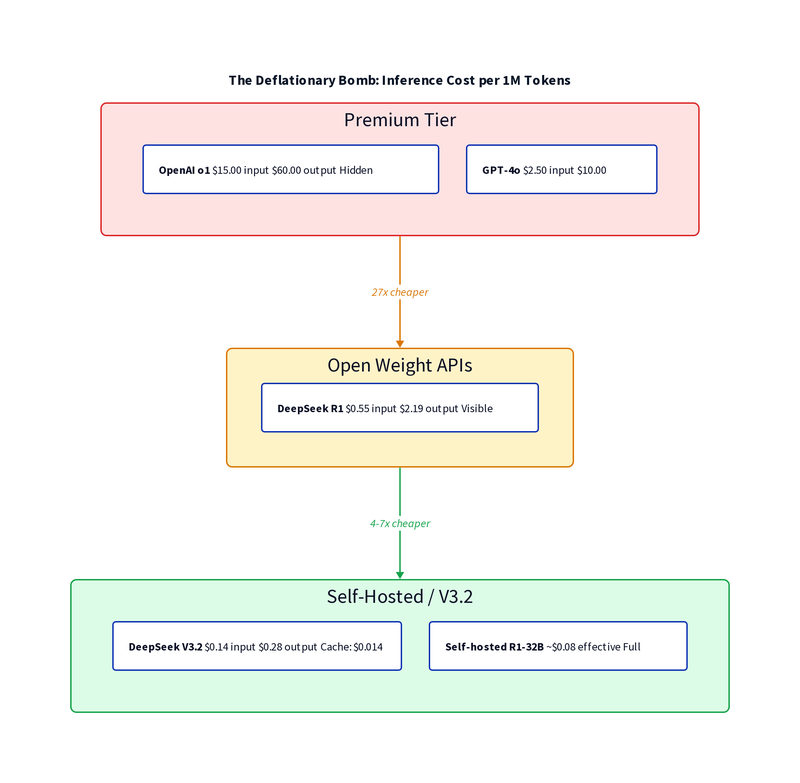

The cost implications are significant and asymmetric:

- GPT-4o: $2.50 input / $10.00 output per 1M tokens (no hidden reasoning)

- OpenAI o1: $15.00 input / $60.00 output per 1M tokens (hidden reasoning, billed)

- DeepSeek R1 (API): $0.55 input / $2.19 output per 1M tokens (visible traces)

- Self-hosted R1 (32B): ~$0.08 per 1M tokens, effective estimate for input and output (visible traces, full control)

The striking finding: DeepSeek's distilled 32B model matches o1-mini on reported reasoning benchmarks at 1/20th the cost. Reasoning distillation, which trains smaller models on the traces of larger ones, is commoditizing "thinking" capability.

Enterprise implication: The "moat" of proprietary reasoning models is eroding. Self-hosting distilled open-weight reasoners becomes economically compelling above modest volume thresholds.
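The volume threshold follows from a simple break-even condition: self-hosting wins once monthly volume V satisfies V·p_api > F + V·p_self, where F is the fixed monthly cost of the hardware. The $10,000/month value of F below is a hypothetical placeholder, not a quoted GPU price, and pricing every token at R1's API output rate ($2.19) against the ~$0.08 self-hosted estimate is a simplification.

```python
def break_even_tokens(fixed_monthly_cost: float, api_price_per_m: float,
                      self_host_price_per_m: float) -> float:
    """Monthly tokens above which self-hosting beats the API.

    Solves V * p_api = F + V * p_self for V (prices are per 1M tokens)."""
    margin_per_token = (api_price_per_m - self_host_price_per_m) / 1_000_000
    if margin_per_token <= 0:
        return float("inf")  # the API is never more expensive per token
    return fixed_monthly_cost / margin_per_token


# Hypothetical fixed cost of $10,000/month; R1 API output price vs ~$0.08 self-hosted.
v = break_even_tokens(10_000, 2.19, 0.08)
print(f"break-even ~ {v / 1e9:.1f}B tokens/month")
```

With those placeholder inputs the break-even point is about 4.7B tokens per month; since V scales linearly with F, halving the fixed cost halves the threshold.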


DeepSeek V3.2 (Released December 1st)

As if to punctuate the NeurIPS thesis, DeepSeek released V3.2 on December 1st—a model that embodies both the inference scaling and architectural efficiency trends.

The architecture: 671B total parameters, but only 37B active per token via fine-grained Mixture-of-Experts. The innovation is DeepSeek Sparse Attention (DSA): a lightweight "Lightning Indexer" scores the preceding context, and the main attention then attends only to the top-k most relevant tokens, reducing core attention complexity from O(L²) to O(L·k). Combined with Multi-Head Latent Attention (MLA), which compresses the KV cache to about 70KB per token (vs. 516KB for Llama 3.1 405B), V3.2 can serve massive batch sizes on the same hardware.
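The core idea behind top-k sparse attention can be sketched in NumPy. This is a toy, not DeepSeek's DSA kernel: the "indexer" here is a hypothetical low-dimensional projection scored with a plain dot product, and each query attends only to its k highest-scoring causal predecessors, so the softmax-and-value step costs O(L·k) instead of O(L²). The indexer pass itself still touches every pair, but is much cheaper per pair.

```python
import numpy as np


def topk_sparse_attention(q, k, v, q_idx, k_idx, top_k):
    """Causal attention where each query attends only to its top_k keys.

    q, k, v: (L, d) main projections. q_idx, k_idx: (L, d_idx) cheap indexer
    projections (a hypothetical stand-in for a lightning-indexer-style scorer).
    """
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    L, d = q.shape
    out = np.zeros_like(v)
    for t in range(L):
        # 1) Cheap relevance scores against all causal predecessors (positions 0..t).
        idx_scores = k_idx[: t + 1] @ q_idx[t]
        # 2) Keep only the top_k highest-scoring positions.
        keep = np.argsort(idx_scores)[-min(top_k, t + 1):]
        # 3) Ordinary scaled dot-product attention over the selected keys only.
        logits = k[keep] @ q[t] / np.sqrt(d)
        weights = np.exp(logits - logits.max())
        weights /= weights.sum()
        out[t] = weights @ v[keep]
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    L, d, d_idx = 16, 8, 4
    q, k, v = (rng.standard_normal((L, d)) for _ in range(3))
    q_idx, k_idx = rng.standard_normal((L, d_idx)), rng.standard_normal((L, d_idx))
    print(topk_sparse_attention(q, k, v, q_idx, k_idx, top_k=4).shape)  # (16, 8)
```

Setting top_k to the full sequence length recovers dense causal attention, which makes the sparse version easy to test against a reference.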

The performance: Codeforces rating of 2701 (International Grandmaster range). AIME 2024 math: 39.2% (vs. 16% for GPT-4o). The V3.2-Speciale variant sacrifices tool use entirely for pure reasoning.

The economics: $0.14/1M input tokens. $0.28/1M output. Cache hits drop to $0.014/1M. This is the "deflationary bomb" that forces Western providers to justify their premiums.

The catch: The API is hosted in China (data sovereignty concerns for regulated industries). Safety guardrails are weaker (24% refusal rate on malicious code prompts). Self-hosting requires ~386GB of VRAM (five to six 80GB A100s at INT4). For enterprises with strict compliance requirements, the model must be self-hosted behind firewalls with additional safety layers.
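The ~386GB figure is consistent with simple weight-memory arithmetic. This sketch assumes INT4 weights (0.5 bytes per parameter) and a hypothetical ~15% overhead for KV cache, activations, and runtime buffers; real overhead depends heavily on context length and batch size, and the GPU count assumes 80GB A100s.

```python
import math


def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


def gpus_needed(total_gb: float, gpu_gb: float = 80.0) -> int:
    """Minimum number of GPUs whose combined memory covers total_gb."""
    return math.ceil(total_gb / gpu_gb)


weights = weight_memory_gb(671e9, 4)  # INT4 weights for 671B parameters
total = weights * 1.15                # hypothetical 15% serving overhead
print(f"weights {weights:.1f} GB, total ~{total:.0f} GB, A100-80GB x {gpus_needed(total)}")
```

The same arithmetic puts a 32B distilled model at 64GB of 16-bit weights before overhead, which is why it fits on a small number of A100s.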

V3.2 demonstrates that necessity breeds innovation. Constrained by export controls limiting access to top-tier GPUs, DeepSeek optimized architecture instead of brute-forcing scale. The result: GPT-4-class intelligence at 1/20th the cost.

The Controversy: Do They Really Think?

Apple's "Illusion of Thinking" paper (June 2025) sparked debate by showing that reasoning models collapse on high-complexity puzzles (e.g., 8-disk Tower of Hanoi). Critics quickly identified flaws: some test configurations required more output tokens than the models were allowed to generate, and some puzzle instances were unsolvable.
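The token-limit critique is easy to check: an n-disk Tower of Hanoi needs 2^n - 1 moves, so a complete move list grows exponentially. This sketch generates the optimal solution and estimates its output length; `tokens_per_move = 10` is a hypothetical figure, since the real count depends on the tokenizer and the output format.

```python
def hanoi(n: int, src: str = "A", aux: str = "B", dst: str = "C"):
    """Yield the optimal move sequence for n disks as (disk, from_peg, to_peg)."""
    if n == 0:
        return
    yield from hanoi(n - 1, src, dst, aux)  # park the n-1 smaller disks on aux
    yield (n, src, dst)                     # move the largest disk
    yield from hanoi(n - 1, aux, src, dst)  # stack the smaller disks back on top


def estimated_output_tokens(n: int, tokens_per_move: int = 10) -> int:
    """Rough token count for writing out all 2**n - 1 moves."""
    return (2 ** n - 1) * tokens_per_move


for disks in (8, 10, 12, 15):
    print(disks, 2 ** disks - 1, estimated_output_tokens(disks))
```

At 10 tokens per move, 15 disks already needs 327,670 output tokens just to list the solution, far beyond common output-token limits.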

The synthesis: models exhibit bounded reasoning. They can think, but they can't think forever. The architectural implication is clear: reasoning models need either far longer effective context or the ability to summarize and checkpoint intermediate states for long-horizon problems.

What to Do

Adopt the Router Pattern: Use a cheap classifier to route queries. Simple questions go to GPT-4o-mini ($0.15 per 1M input tokens). Complex reasoning goes to o1 or self-hosted R1. Depending on the query mix, this can cut costs substantially (by as much as 80% in some workloads) while improving quality on hard problems.
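A minimal router sketch. The keyword-and-length heuristic in `classify` is a hypothetical stand-in for the "cheap classifier" (a real router would use a small trained model), the model names are plain labels rather than API calls, and the prices are the per-1M input rates quoted in this article.

```python
from dataclasses import dataclass

# Hypothetical markers of reasoning-heavy requests; a real router would use a trained classifier.
HARD_MARKERS = ("prove", "derive", "optimize", "debug", "algorithm", "step by step")


@dataclass(frozen=True)
class Route:
    model: str                # label only; no API call is made here
    input_price_per_m: float  # $ per 1M input tokens, as quoted in this article


CHEAP = Route("gpt-4o-mini", 0.15)
REASONER = Route("o1", 15.00)  # could equally be a self-hosted R1 endpoint


def classify(query: str) -> str:
    """Crude difficulty heuristic: 'hard' if any marker appears or the query is long."""
    text = query.lower()
    if any(marker in text for marker in HARD_MARKERS) or len(text.split()) > 150:
        return "hard"
    return "easy"


def route(query: str) -> Route:
    return REASONER if classify(query) == "hard" else CHEAP


def input_cost(queries: list[str], tokens_per_query: int = 1_000) -> float:
    """Total input cost in dollars, assuming a fixed prompt size per query."""
    return sum(route(q).input_price_per_m for q in queries) * tokens_per_query / 1_000_000


if __name__ == "__main__":
    traffic = ["What are your opening hours?"] * 9 + ["Derive a closed form for this recurrence."]
    routed = input_cost(traffic)
    all_reasoner = len(traffic) * REASONER.input_price_per_m * 1_000 / 1_000_000
    print(f"routed: ${routed:.4f}  everything-to-o1: ${all_reasoner:.4f}")
```

Even this crude rule shows the economics: with nine easy queries for every hard one, routed input spend is about 11% of sending everything to o1.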

Invest in Async UX: Reasoning calls can take 10-60 seconds, and synchronous chat patterns break. Build job queues, status indicators, and "thinking" visualizations. Transform latency from an annoyance into a trust signal.
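One way to decouple slow reasoning calls from the request/response cycle is a job queue with a pollable status. A minimal asyncio sketch: `slow_reasoning_call` is a hypothetical placeholder for the model request, and the in-memory dict stands in for a real queue or database.

```python
import asyncio
import uuid

JOBS: dict[str, dict] = {}         # job_id -> {"status": ..., "result": ...}
_TASKS: set[asyncio.Task] = set()  # keep references so tasks are not garbage-collected


async def slow_reasoning_call(prompt: str) -> str:
    """Hypothetical stand-in for a 10-60 s reasoning-model request."""
    await asyncio.sleep(0.1)
    return f"answer to: {prompt}"


async def _run(job_id: str, prompt: str) -> None:
    JOBS[job_id]["status"] = "thinking"  # a UI can render a "thinking" indicator
    try:
        JOBS[job_id]["result"] = await slow_reasoning_call(prompt)
        JOBS[job_id]["status"] = "done"
    except Exception as exc:  # surface failures instead of hanging
        JOBS[job_id].update(status="failed", result=str(exc))


def submit(prompt: str) -> str:
    """Enqueue a job and return an id immediately; call from inside a running loop."""
    job_id = uuid.uuid4().hex
    JOBS[job_id] = {"status": "queued", "result": None}
    task = asyncio.get_running_loop().create_task(_run(job_id, prompt))
    _TASKS.add(task)
    task.add_done_callback(_TASKS.discard)
    return job_id


def status(job_id: str) -> dict:
    return JOBS[job_id]


async def demo() -> None:
    job_id = submit("Plan the database migration")
    print(status(job_id)["status"])  # queued: the task has not started yet
    while status(job_id)["status"] not in ("done", "failed"):
        await asyncio.sleep(0.02)    # a real client would poll an endpoint or get a push
    print(status(job_id))


if __name__ == "__main__":
    asyncio.run(demo())
```

The client gets a job id instantly and can show progress states, which is what turns waiting time into a visible "thinking" phase.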

Consider Open-Weight Distillation: DeepSeek R1's distilled 32B model runs on 2-4 A100s. For privacy-sensitive or high-volume applications, self-hosting offers both cost savings and auditability (you can see the full reasoning trace).

Shift 2: The Artificial Hivemind

The NeurIPS 2025 Best Paper "Artificial Hivemind: The Open-Ended Homogeneity of Language Models" delivers an uncomfortable finding: despite vendor marketing about distinct "personalities," frontier LLMs are converging toward highly similar outputs.

The Evidence

The researchers built Infinity-Chat, a benchmark of 26,000 real-world open-ended queries, backed by 31,250 human annotations (25 independent ratings per annotated example, far denser than typical RLHF data). Two findings stand out:

- Intra-model repetition: Even with high temperature, a single model retreads the same semantic ground.

- Inter-model homogeneity: Different models from competing labs produce outputs with 71-82% pairwise similarity.

GPT-4 and Claude don't just sound similar. They are similar, on the dimensions that matter for creative and strategic work.
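The inter-model comparison can be sketched as mean pairwise cosine similarity between responses to the same prompt. The paper works with embeddings; to stay self-contained, this sketch substitutes bag-of-words count vectors, which capture word overlap rather than meaning, and the responses are made-up examples.

```python
import math
import re
from collections import Counter
from itertools import combinations


def bow(text: str) -> Counter:
    """Bag-of-words counts (a crude stand-in for a sentence embedding)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(x * x for x in a.values())) * math.sqrt(sum(x * x for x in b.values()))
    return dot / norm if norm else 0.0


def mean_pairwise_similarity(responses: dict[str, str]) -> float:
    """Average cosine similarity over all pairs of model responses."""
    if len(responses) < 2:
        raise ValueError("need at least two responses")
    vecs = {name: bow(text) for name, text in responses.items()}
    pairs = list(combinations(vecs, 2))
    return sum(cosine(vecs[a], vecs[b]) for a, b in pairs) / len(pairs)


# Made-up responses to the same open-ended prompt.
responses = {
    "model_a": "Time is a river that carries us forward.",
    "model_b": "Time is a river, quietly carrying us forward.",
    "model_c": "A clock's tick is the sound of rent coming due.",
}
print(round(mean_pairwise_similarity(responses), 2))
```

Swapping `bow` for a real embedding model changes the similarity notion from shared words to shared meaning, which is closer to what the paper measures.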

Why This Happens

RLHF is mode-seeking: Reinforcement Learning from Human Feedback optimizes for the single answer that maximizes expected reward. Creative, risky, or polarizing responses get penalized because they look like hallucinations to conservative reward models. The "weird idea"—often the breakthrough idea—is systematically suppressed.
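The mode-seeking pressure can be illustrated with the closed-form optimum of the KL-regularized objective commonly used in RLHF, π*(y) ∝ π_ref(y)·exp(r(y)/β), a standard result that real training pipelines only approximate. The reference probabilities and rewards below are made-up values over four candidate responses.

```python
import numpy as np


def kl_regularized_optimum(p_ref: np.ndarray, reward: np.ndarray, beta: float) -> np.ndarray:
    """Maximizer of E_pi[r] - beta * KL(pi || p_ref): pi(y) ∝ p_ref(y) * exp(r(y) / beta)."""
    logits = np.log(p_ref) + reward / beta
    w = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return w / w.sum()


def entropy_bits(p: np.ndarray) -> float:
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())


# Hypothetical reference policy over four candidate responses, from "consensus" to "weird".
p_ref = np.array([0.4, 0.3, 0.2, 0.1])
# Hypothetical reward model that scores the consensus answer highest.
reward = np.array([1.0, 0.8, 0.5, 0.2])

for beta in (10.0, 1.0, 0.1):
    pi = kl_regularized_optimum(p_ref, reward, beta)
    print(f"beta={beta:>4}: probs={np.round(pi, 3)}, entropy={entropy_bits(pi):.2f} bits")
```

As β shrinks, probability drains out of the low-reward "weird" responses. In this toy case the entropy falls from about 1.8 bits at β = 10 to under 0.5 bits at β = 0.1.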

Data incest: Whether GPT-4, Claude, or Llama, the training diet is approximately identical—Common Crawl, Wikipedia, GitHub, public domain books. When 80-90% of training tokens are shared, foundational associations converge.

LLM-as-a-Judge: The industry practice of using GPT-4 to evaluate other models creates recursive style transfer. New models learn that "quality" means "sounds like GPT-4."

The Enterprise Risk

Strategic planning homogenization: If Company A and Company B both ask their respective AI systems for "future mobility strategies," they'll receive nearly identical recommendations. Everyone gets Electrification, Autonomous Driving, and Mobility-as-a-Service. The "Blue Ocean" strategy generator produces Red Ocean consensus.

Brand voice flattening: AI-assisted copy gravitates toward globally accessible (generic) language. The distinctive voice of a luxury brand becomes indistinguishable from mass-market communication.

Security monoculture: Developers relying on AI code generation converge on identical implementation patterns. A vulnerability in the Hivemind's preferred JWT handling pattern becomes a skeleton key for the entire ecosystem.

Model Collapse: The Recursive Spiral

The problem compounds over time. As the internet fills with AI-generated content, future models train on current models' outputs. Research on "Model Collapse" shows:

- The "tail" of the data distribution vanishes first—rare, quirky, valuable insights

- Vocabulary size reduction and quality loss occur within 5-10 generations of recursive training

- Some observers project palpable "cognitive stagnation" in foundation models by 2026-2027
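The vanishing-tail effect can be reproduced in a toy setting similar to the single-Gaussian example analyzed in the model-collapse literature: each generation fits a Gaussian to a finite sample drawn from the previous generation's fit. The sample size and generation count are arbitrary choices for illustration; estimation error compounds, and the fitted spread, and with it the tails, shrinks toward zero.

```python
import numpy as np


def simulate_collapse(n_samples: int = 10, generations: int = 200, seed: int = 0) -> list[float]:
    """Each generation refits mean/std to samples drawn from the previous fit.

    Returns the fitted standard deviation after every generation."""
    rng = np.random.default_rng(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original "human" data distribution
    history = [sigma]
    for _ in range(generations):
        data = rng.normal(mu, sigma, size=n_samples)  # train on the previous model's output
        mu, sigma = data.mean(), data.std()           # maximum-likelihood refit
        history.append(float(sigma))
    return history


stds = simulate_collapse()
for g in (0, 10, 50, 200):
    print(f"generation {g:>3}: fitted std = {stds[g]:.3g}")
```

Larger samples per generation slow the collapse but do not remove the downward drift, because each refit loses a little of the tail.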

The implication: "Vintage" pre-2022 data (largely free of AI-generated text) may become a premium strategic asset.

What to Do

Build a Council of Models: Don't just route to the cheapest model. Route to diverse models. Benchmark your specific tasks to find model pairs with low output similarity. Sometimes the "best" model is the most orthogonal one.
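Given pairwise output-similarity scores from your own benchmark (computed, for example, as embedding cosine similarity), picking the most orthogonal models is a small search. The model names and scores below are hypothetical.

```python
from itertools import combinations


def pick_council(models: list[str], sim: dict[tuple[str, str], float], size: int) -> tuple[str, ...]:
    """Return the `size` models with the lowest mean pairwise output similarity.

    Exhaustive search, which is fine for a handful of candidate models."""
    if size < 2:
        raise ValueError("a council needs at least two models")

    def pair_sim(a: str, b: str) -> float:
        return sim[(a, b)] if (a, b) in sim else sim[(b, a)]

    def mean_sim(group: tuple[str, ...]) -> float:
        pairs = list(combinations(group, 2))
        return sum(pair_sim(a, b) for a, b in pairs) / len(pairs)

    return min(combinations(models, size), key=mean_sim)


# Hypothetical similarity scores measured on your own task suite.
sim = {
    ("model_a", "model_b"): 0.82,
    ("model_a", "model_c"): 0.74,
    ("model_a", "model_d"): 0.71,
    ("model_b", "model_c"): 0.79,
    ("model_b", "model_d"): 0.77,
    ("model_c", "model_d"): 0.80,
}
models = ["model_a", "model_b", "model_c", "model_d"]
print(pick_council(models, sim, size=2))  # ('model_a', 'model_d')
```

Minimizing mean similarity is one reasonable objective; minimizing the worst pair instead would guard harder against any two council members echoing each other.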

Demand divergence explicitly: System prompts that demand "contrarian," "high-entropy," or "risk-seeking" outputs can force models out of their RLHF comfort zones.

Preserve human variance: Human-in-the-loop interventions aren't just for safety—they're for injecting the "out-of-distribution weirdness" that models systematically eliminate. Tag and protect your pre-LLM data as "vintage."

Shift 3: From Demos to Production

The conversation around AI agents has fundamentally matured. In 2023, the question was "can agents use tools?" In 2025, the question is "why do multi-agent systems fail, and how do we fix them?"

Gartner's prediction that over 40% of agentic AI projects will be canceled by the end of 2027 reflects a "reliability chasm": the gap between impressive demos and production-grade systems.

The Failure Taxonomy

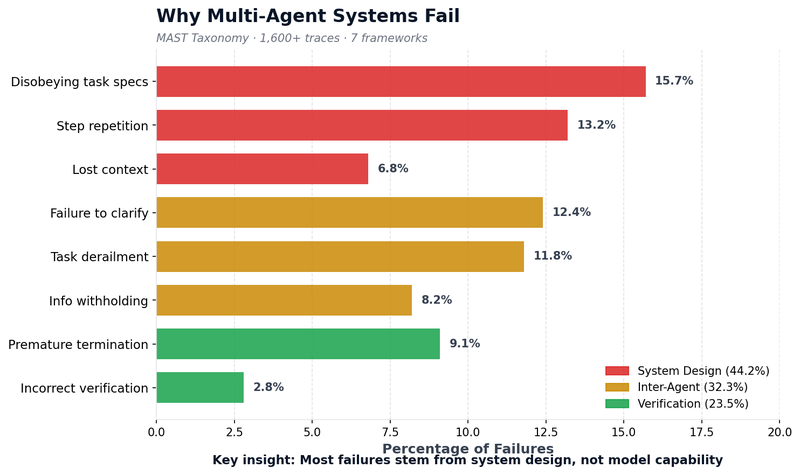

The Multi-Agent System Failure Taxonomy (MAST), derived from 1,600+ execution traces across seven frameworks, identifies 14 distinct failure modes in three categories. Selected modes in each category:

System Design Issues (44.2%):

- Disobeying task specifications (15.7%): As prompt complexity increases, agents "forget" constraints

- Step repetition (13.2%): Repetitive loops when agents lack counterfactual reasoning

- Loss of conversation history (6.8%): Context window limits cause "amnesiac" behavior

Inter-Agent Misalignment (32.3%):

- Failure to clarify (12.4%): Agents guess rather than ask, causing "assumption drift"

- Task derailment (11.8%): Without strong orchestration, agents rabbit-hole into irrelevant discussions

- Information withholding (8.2%): Natural language is "lossy" for state transfer between agents

Task Verification (23.5%):

- Premature termination (9.1%): Agents mark tasks "complete" before criteria are met

- Incorrect verification (2.8%): Generators are poor verifiers—the bias that creates an error prevents seeing it

The critical insight: most failures stem from system design, not model capability.
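Tallying annotated traces by category is the first step in applying the taxonomy to your own system. In this minimal sketch the mode-to-category mapping covers only the modes listed above, and the trace labels are hypothetical annotations (from human reviewers or an LLM judge).

```python
from collections import Counter

# Mapping restricted to the failure modes listed above.
MODE_TO_CATEGORY = {
    "disobey_task_spec": "System Design",
    "step_repetition": "System Design",
    "loss_of_history": "System Design",
    "failure_to_clarify": "Inter-Agent Misalignment",
    "task_derailment": "Inter-Agent Misalignment",
    "information_withholding": "Inter-Agent Misalignment",
    "premature_termination": "Task Verification",
    "incorrect_verification": "Task Verification",
}


def category_shares(trace_labels: list[list[str]]) -> dict[str, float]:
    """Share of all observed failure labels that fall into each category, largest first."""
    counts = Counter(MODE_TO_CATEGORY[mode] for labels in trace_labels for mode in labels)
    total = sum(counts.values())
    return {cat: n / total for cat, n in counts.most_common()}


# Hypothetical annotations for four failed traces (a trace can have several modes).
traces = [
    ["disobey_task_spec", "premature_termination"],
    ["step_repetition"],
    ["failure_to_clarify", "task_derailment"],
    ["disobey_task_spec"],
]
for category, share in category_shares(traces).items():
    print(f"{category}: {share:.0%}")
```

If System Design dominates your own tally, the fix is usually in prompts, roles, and orchestration rather than in a bigger model.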

Fault Attribution: The RAFFLES Framework

Debugging a 50-step multi-agent trace is nightmarish. Capital One's RAFFLES framework treats debugging as an agentic task:

- A "Judge" agent analyzes the trace and proposes a hypothesis: "Agent A failed at step 5 because..."

- "Evaluator" agents critique the reasoning

- The system iterates until high-confidence attribution is achieved

RAFFLES achieved 43% accuracy on fault attribution benchmarks—up from 16.6% for prior methods. For enterprises, this suggests a future where "Watcher Agents" perform real-time trace analysis, creating an immune system for agent swarms.
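The judge/evaluator loop can be sketched as iterative refinement with a confidence stop. This is not Capital One's implementation: `judge` and `evaluators` are hypothetical callables (in practice, LLM prompts), and taking the minimum evaluator confidence, the 0.8 threshold, and the round cap are our own choices.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Hypothesis:
    agent: str  # which agent is blamed
    step: int   # at which step of the trace
    rationale: str


@dataclass
class Critique:
    confidence: float  # evaluator's confidence (0..1) that the hypothesis is right
    feedback: str


Judge = Callable[[list[str], list[str]], Hypothesis]
Evaluator = Callable[[list[str], Hypothesis], Critique]


def attribute_fault(trace: list[str], judge: Judge, evaluators: list[Evaluator],
                    threshold: float = 0.8, max_rounds: int = 5) -> tuple[Hypothesis, float]:
    """Alternate judge proposals and evaluator critiques until confidence >= threshold.

    Returns the highest-confidence hypothesis seen and its confidence."""
    if max_rounds < 1 or not evaluators:
        raise ValueError("need at least one round and one evaluator")
    feedback: list[str] = []
    best_hyp, best_conf = None, -1.0
    for _ in range(max_rounds):
        hyp = judge(trace, feedback)  # "agent X failed at step k because..."
        critiques = [evaluate(trace, hyp) for evaluate in evaluators]
        conf = min(c.confidence for c in critiques)  # conservative: every evaluator must agree
        if conf > best_conf:
            best_hyp, best_conf = hyp, conf
        if conf >= threshold:
            break
        feedback.extend(c.feedback for c in critiques)  # critiques steer the next proposal
    return best_hyp, best_conf


if __name__ == "__main__":
    def stub_judge(trace, feedback):
        # Hypothetical judge: blames step 2 first, revises after criticism.
        return Hypothesis("planner", 5 if feedback else 2, "stub rationale")

    def stub_evaluator(trace, hyp):
        if hyp.step == 5:
            return Critique(0.9, "consistent with the trace")
        return Critique(0.3, "step 2 output matches the spec")

    print(attribute_fault([f"step {i}" for i in range(8)], stub_judge, [stub_evaluator]))
```

Returning the best hypothesis even when the threshold is never reached lets a human reviewer start from the most plausible attribution instead of from scratch.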

The Reliability Patterns

Checkpointing: Persist full agent state after every step. LangGraph's PostgresSaver enables "time travel" debugging—rewind to a failed step, modify state, and replay.
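The time-travel idea does not depend on a particular framework. This is a self-contained sketch of the pattern, not LangGraph's API: the runner stores a deep copy of state before every step, so a run can be rewound to any step, edited, and replayed. The in-memory list stands in for a durable store such as Postgres.

```python
import copy
from typing import Callable, Optional

State = dict
Step = Callable[[State], State]


class CheckpointedRunner:
    """Runs a fixed sequence of steps, snapshotting state before each one."""

    def __init__(self, steps: list[Step]):
        self.steps = steps
        self.checkpoints: list[State] = []  # checkpoints[i] = state just before step i

    def run(self, state: State, start: int = 0) -> State:
        # Call with start > 0 only via replay_from, so snapshot indices stay aligned.
        del self.checkpoints[start:]  # replaying discards the old "future"
        for i in range(start, len(self.steps)):
            self.checkpoints.append(copy.deepcopy(state))
            state = self.steps[i](state)
        return state

    def replay_from(self, step: int, patch: Optional[dict] = None) -> State:
        """Time travel: rewind to the snapshot before `step`, edit it, and re-run."""
        state = copy.deepcopy(self.checkpoints[step])
        state.update(patch or {})
        return self.run(state, start=step)


if __name__ == "__main__":
    def add_one(s: State) -> State:
        return {**s, "x": s["x"] + 1}

    def double(s: State) -> State:
        return {**s, "x": s["x"] * 2}

    runner = CheckpointedRunner([add_one, double, add_one])
    print(runner.run({"x": 1}))              # {'x': 5}
    print(runner.replay_from(1, {"x": 10}))  # {'x': 21}
```

Only the steps after the rewind point re-execute, which is what makes retrying a long trace from a failed step cheap.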

Reflexion (Generator-Critic): Pair every generator with a dedicated critic. The critic evaluates against explicit rubrics, the generator reflects on failures, and the loop continues until quality gates pass. Increases latency 2-3x but can boost success rates from 50% to 90%.
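A minimal generator-critic loop with an explicit rubric and a round cap. The generator and the rubric checks are hypothetical placeholders for LLM calls; the structure (critique against explicit criteria, feed the failures back, retry until the quality gate passes) is the pattern described above.

```python
from typing import Callable

Rubric = dict[str, Callable[[str], bool]]  # criterion name -> pass/fail check


def critique(draft: str, rubric: Rubric) -> list[str]:
    """Return the names of the rubric criteria the draft fails."""
    return [name for name, check in rubric.items() if not check(draft)]


def generate_with_critic(generate: Callable[[list[str]], str], rubric: Rubric,
                         max_rounds: int = 3) -> tuple[str, list[str]]:
    """Regenerate with the critic's feedback until every criterion passes or rounds run out.

    Returns the last draft and the criteria it still fails (empty list = gate passed)."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    feedback: list[str] = []
    for _ in range(max_rounds):
        draft = generate(feedback)  # the generator sees which criteria failed last time
        feedback = critique(draft, rubric)
        if not feedback:
            break                   # quality gate passed
    return draft, feedback


if __name__ == "__main__":
    rubric: Rubric = {
        "under_50_words": lambda d: len(d.split()) < 50,
        "cites_source": lambda d: "[source]" in d,
    }

    def fake_generator(feedback: list[str]) -> str:
        # Hypothetical generator that adds a citation once told it is missing.
        return "Draft summary [source]" if "cites_source" in feedback else "Draft summary"

    print(generate_with_critic(fake_generator, rubric))  # ('Draft summary [source]', [])
```

A non-empty failure list after the last round is a natural trigger for escalating to a human rather than shipping the draft.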

Human-in-the-Loop (HITL): Not just for safety—for capability. Static interrupts hardcode approval points (always stop before payments). Dynamic interrupts let agents trigger clarification based on confidence. The "Collaborator" pattern lets humans modify agent state mid-execution.

Confidence-Based Deferral: Agents output confidence scores. Below a threshold, they defer to humans or more capable models. This creates joint human-AI systems where AI handles routine cases and defers exceptional ones.
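Static interrupts and confidence-based deferral can share one gate function. In this sketch the action names, the 0.7 threshold, and the escalation targets are hypothetical; a real system would calibrate the threshold against observed error rates.

```python
from dataclasses import dataclass

ALWAYS_REVIEW = {"send_payment", "delete_records"}  # static interrupts (hypothetical actions)


@dataclass
class Decision:
    route: str  # "execute", "human", or "stronger_model"
    reason: str


def gate(action: str, confidence: float, threshold: float = 0.7,
         stronger_model_available: bool = True) -> Decision:
    """Decide whether an agent's proposed action runs, escalates, or waits for a human."""
    if action in ALWAYS_REVIEW:
        return Decision("human", f"static interrupt: {action} always needs approval")
    if confidence >= threshold:
        return Decision("execute", f"confidence {confidence:.2f} >= {threshold}")
    if stronger_model_available:
        return Decision("stronger_model", f"low confidence {confidence:.2f}; retry with a more capable model")
    return Decision("human", f"low confidence {confidence:.2f}; ask a human to clarify")


print(gate("send_payment", 0.99).route)   # human
print(gate("summarize_ticket", 0.9).route)  # execute
print(gate("summarize_ticket", 0.4).route)  # stronger_model
```

Checking static interrupts first means no confidence score, however high, can bypass a mandatory approval.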

Framework Selection

- LangGraph: Explicit graphs, high reliability. Best for production enterprise apps.

- CrewAI: Manager-delegated, medium reliability. Best for content/process pipelines.

- AutoGen: Emergent conversation, variable reliability. Best for R&D, exploration.

LangGraph's deterministic routing prevents the "task derailment" common in conversational frameworks. For production, explicit beats emergent.

What to Do

Map your failure modes: Run traces through MAST categories before production. Most failures are System Design—fixable without better models.

Implement checkpointing from day one: Retrying 50-step executions from zero is unacceptable. Time-travel debugging transforms agent development.

Design HITL as collaboration, not just approval: The best systems let humans modify agent state, not just accept/reject. Build UIs that expose internal state.

Measure the right things: Traditional APM misses semantic failures. Invest in agent observability (LangSmith, AgentOps) that captures reasoning traces, not just execution traces.

What This Means for Enterprise Strategy

Near-Term (6-12 months)

Adopt test-time compute routing: The economics favor hybrid approaches. Simple queries to cheap models, complex reasoning to o1 or self-hosted R1.

Audit your model diversity: If you're using multiple vendors for "redundancy," verify they actually produce different outputs on your use cases. The Hivemind research suggests they may not.

Instrument your agents: The MAST taxonomy exists. Use it. Map your failure modes before they hit production.

Medium-Term (12-24 months)

Build the verification layer: Generators are poor verifiers. Pair every critical agent with a dedicated critic using explicit rubrics.

Consider open-weight reasoning: DeepSeek R1's distilled models match proprietary models such as o1-mini on reported reasoning benchmarks at a fraction of the cost. As reasoning capability commoditizes, the calculus shifts toward self-hosting.

Protect your vintage data: Pre-2022 human-generated data may become strategically valuable as model collapse accelerates.

Strategic Considerations

The three shifts share a common thread: the easy wins are over. Dropping in an API and watching magic happen was the 2023-2024 playbook. 2025 demands systems thinking:

- Inference economics require routing, not just calling

- Homogenization requires architectural diversity, not just vendor diversity

- Agent reliability requires engineering discipline, not just prompt engineering

The organizations that treat AI as infrastructure—with the rigor that implies—will pull ahead of those still treating it as a feature.

Applied AI has been building production AI systems since 2015. We combine deep technical knowledge with practical enterprise experience to help organizations navigate the rapidly evolving AI landscape.

Other Notable Awards

Beyond the three shifts, several Best Paper awards merit practitioner attention:

Best Papers

- Gated Attention for LLMs (Alibaba Qwen): Head-specific sigmoid gating after attention eliminates "attention sink" artifacts that waste context on initial tokens. Improves performance across 30+ experiments.

- 1000 Layer Networks for Self-Supervised RL: Demonstrates that scaling network depth pays off in RL: networks of up to 1024 layers unlock new goal-reaching capabilities, which is relevant for agentic reasoning.

- Why Diffusion Models Don't Memorize: Identifies two training timescales—when models learn to generate vs. when they start memorizing. Critical for understanding model reliability and privacy.
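Where the gate in the first paper sits can be sketched in a few lines of NumPy: compute ordinary scaled dot-product attention per head, then multiply each head's output elementwise by a sigmoid gate computed from the same input, before the heads are concatenated. The shapes and random weights are illustrative, and causal masking and the output projection are omitted; the paper credits this input-dependent gating with removing the attention sink, which this toy does not attempt to show.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    z = np.exp(x - x.max(axis=axis, keepdims=True))  # shift for numerical stability
    return z / z.sum(axis=axis, keepdims=True)


def gated_attention(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray,
                    w_gate: np.ndarray, n_heads: int) -> np.ndarray:
    """Multi-head attention whose per-head outputs are scaled by a sigmoid gate.

    x: (L, d_model); all weight matrices: (d_model, d_model). No causal mask and
    no output projection, to keep the sketch short."""
    L, d_model = x.shape
    if d_model % n_heads:
        raise ValueError("d_model must be divisible by n_heads")
    d_head = d_model // n_heads

    def split(t: np.ndarray) -> np.ndarray:  # (L, d_model) -> (n_heads, L, d_head)
        return t.reshape(L, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))  # (n_heads, L, L)
    heads = attn @ v                                              # (n_heads, L, d_head)
    gate = sigmoid(split(x @ w_gate))  # head-specific, query-dependent, values in (0, 1)
    return (heads * gate).transpose(1, 0, 2).reshape(L, d_model)  # concatenate heads


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    L, d_model, n_heads = 6, 16, 4
    x = rng.standard_normal((L, d_model))
    w_q, w_k, w_v, w_gate = (rng.standard_normal((d_model, d_model)) * 0.1 for _ in range(4))
    print(gated_attention(x, w_q, w_k, w_v, w_gate, n_heads).shape)  # (6, 16)
```

Because each gate value lies in (0, 1), a head can scale its contribution down toward zero for a given query instead of parking attention mass on an early token.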

Runner-Ups

- Tight Mistake Bounds for Transductive Online Learning: Resolves a 30-year-old open problem by showing that the optimal mistake bound is Θ(√d), where d is the Littlestone dimension. The result has implications for continual learning systems.

- Does RL Really Incentivize Reasoning?: Questions whether RLVR (Reinforcement Learning with Verifiable Rewards) expands reasoning or just improves sampling efficiency. Sobering for o1-style reasoning claims.

- Superposition Yields Robust Scaling: First-principles derivation of scaling laws suggests power-law behavior is a geometric inevitability of compressing sparse concepts into dense spaces.
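The sampling-efficiency question in the RLVR paper is usually measured with pass@k. This sketch implements the standard unbiased estimator from the Codex/HumanEval evaluation methodology: with n samples per problem of which c are correct, pass@k = 1 - C(n-c, k)/C(n, k). The per-problem correct counts in the demo are made up.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which are correct:
    1 - C(n - c, k) / C(n, k)."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Hypothetical per-problem correct counts out of n = 64 samples for two models.
    rl_model = [40, 38, 0, 0]    # very reliable on some problems, never solves others
    base_model = [12, 10, 2, 1]  # less reliable, but occasionally solves the hard ones
    for k in (1, 64):
        rl = sum(pass_at_k(64, c, k) for c in rl_model) / len(rl_model)
        base = sum(pass_at_k(64, c, k) for c in base_model) / len(base_model)
        print(f"pass@{k}: RL {rl:.2f}  base {base:.2f}")
```

In this made-up example the RL-tuned model wins at pass@1 (0.30 vs 0.10) but loses at pass@64 (0.50 vs 1.00), the kind of crossover the paper uses to argue that RLVR sharpens sampling rather than expanding what the model can solve.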

Test of Time Award

Faster R-CNN (Ren, He, Girshick, Sun, 2015): With 56,700+ citations, this paper transformed object detection and became the backbone for a decade of computer vision advances. A reminder that today's "revolutionary" ideas may feel prehistoric in ten years.