We observe a consistent pattern in stalled AI initiatives: the technology works, but the strategic target is off. Teams often purchase solutions before diagnosing the problem, or chase moonshots while overlooking the compound value of smaller improvements.

While AI adoption has surged, the number of projects successfully scaling beyond pilot remains stubbornly low. This isn't a technology problem—it's a strategy problem. Organizations confuse AI procurement with AI transformation.

This article presents a decision framework for enterprise AI based on patterns we've observed across regulated industries and mid-market organizations. It's not a visionary roadmap. It's a diagnostic tool.

The Readiness Problem

Most organizations approach AI backwards. They select a use case, assemble a team, acquire data, and build a pilot. When the pilot fails to scale, they blame data quality or talent shortages.

The real problem starts earlier: they never assessed whether the organization was ready for the change AI requires.

The AI Readiness Pyramid

Think of AI readiness as a pyramid with five layers. Most organizations attempt to build top-down—mandating cultural transformation while data infrastructure remains broken.

Each layer must support the one above. Culture requires process. Process requires skills. Skills require infrastructure. Infrastructure requires data.

Most failed AI initiatives skip levels 1-3 (data, infrastructure, skills) and try to jump straight to levels 4-5 (process, culture).

A financial services firm we worked with discovered they couldn't answer basic questions about customer data consistency across CRM, billing, and support systems. They had invested $2M in a machine learning platform that sat unused because no one could reliably pull training data. The platform wasn't the problem. The foundation was.
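The dependency chain can be made concrete with a small check that walks the pyramid bottom-up and reports the first layer that is not ready. This is a minimal sketch, not a formal assessment method: the 0.0-1.0 scoring scale, the 0.6 threshold, and the sample scores are all illustrative assumptions.

```python
from typing import Optional

# Layers ordered bottom-up, following the dependency chain in the text:
# culture needs process, process needs skills, skills need infrastructure,
# infrastructure needs data.
LAYERS = ["data", "infrastructure", "skills", "process", "culture"]


def first_blocking_layer(scores: dict[str, float], threshold: float = 0.6) -> Optional[str]:
    """Return the lowest layer whose score is below the threshold, or None.

    `scores` maps each layer name to a self-assessed 0.0-1.0 rating.
    The scale and the default threshold are assumptions for illustration.
    A layer missing from `scores` is treated as not ready.
    """
    for layer in LAYERS:
        if scores.get(layer, 0.0) < threshold:
            return layer
    return None


# Hypothetical scores resembling the financial services example:
# a well-funded platform sitting on a weak data foundation.
assessment = {
    "data": 0.3,
    "infrastructure": 0.8,
    "skills": 0.6,
    "process": 0.5,
    "culture": 0.7,
}
print(f"Fix first: {first_blocking_layer(assessment)}")  # Fix first: data
```

Returning only the lowest failing layer is deliberate: it mirrors the argument that work on upper layers is premature until the layers beneath them hold.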

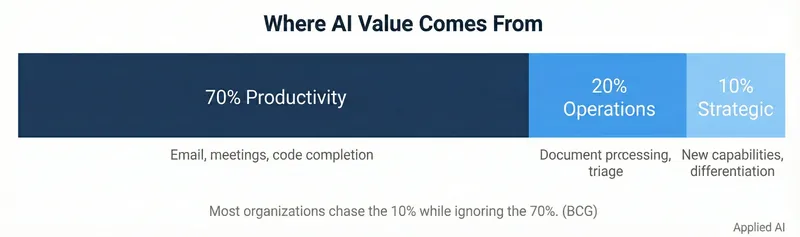

The Value Distribution Principle: 10-20-70

BCG research suggests a counterintuitive distribution of AI value across an organization: roughly 10% from moonshots, 20% from operational improvements, and 70% from small productivity gains.

Companies get this backwards. They hunt for the transformative use case—the AI that will revolutionize their business model—while ignoring the compound effect of making every knowledge worker 10-15% more productive.

What the 70% Looks Like

The seventy percent isn't sexy. It's AI that:

- Auto-categorizes support tickets so agents spend less time routing

- Pre-fills insurance claim forms from uploaded documents (20 minutes saved per claim)

- Suggests relevant contract clauses to legal teams

- Flags anomalous transactions for fraud review

None of these generate press releases. Collectively, they reduce operational costs 15-20% and free thousands of hours for higher-value work.

Case Study: A mid-market insurance carrier implemented this approach. Instead of building a revolutionary claims AI, they deployed six small automation projects over eight months:

| Project | Investment | Annual Savings | Time to First Value |
|---|---|---|---|
| Document classification for intake | $120K | $480K | 6 weeks |
| Damage photo analysis for auto claims | $150K | $520K | 8 weeks |
| Prior authorization lookup | $100K | $380K | 4 weeks |
| Email response suggestions | $130K | $620K | 5 weeks |
| Fraud pattern flagging | $180K | $720K | 10 weeks |
| Policy renewal prediction | $120K | $480K | 8 weeks |
| Total | $800K | $3.2M | n/a |

Note: Savings figures are gross annual value. Actual ROI depends on ongoing maintenance costs (typically 15-25% of initial investment annually) and operational overhead. Net savings after Year 1: approximately $2.6-2.8M.
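The arithmetic behind the table and the note can be reproduced in a few lines. The sketch below uses only the figures stated above. It subtracts maintenance alone, so its net range comes out higher than the $2.6-2.8M quoted in the note; the difference would be the operational overhead, which the source does not itemize. The `payback_months` helper is a hypothetical name introduced for illustration.

```python
# (project, investment in USD, gross annual savings in USD), from the table.
PROJECTS = [
    ("Document classification for intake", 120_000, 480_000),
    ("Damage photo analysis for auto claims", 150_000, 520_000),
    ("Prior authorization lookup", 100_000, 380_000),
    ("Email response suggestions", 130_000, 620_000),
    ("Fraud pattern flagging", 180_000, 720_000),
    ("Policy renewal prediction", 120_000, 480_000),
]

total_investment = sum(inv for _, inv, _ in PROJECTS)
total_savings = sum(sav for _, _, sav in PROJECTS)

# Maintenance range from the note: 15-25% of initial investment per year.
maintenance_low = total_investment * 15 // 100
maintenance_high = total_investment * 25 // 100

# Savings net of maintenance only (operational overhead is NOT included).
net_best = total_savings - maintenance_low
net_worst = total_savings - maintenance_high


def payback_months(investment: int, annual_savings: int) -> float:
    """Months of gross savings needed to recover the initial investment."""
    return 12 * investment / annual_savings


for name, inv, sav in PROJECTS:
    print(f"{name}: payback {payback_months(inv, sav):.1f} months")

print(f"Total investment: ${total_investment:,}")               # $800,000
print(f"Gross annual savings: ${total_savings:,}")               # $3,200,000
print(f"Net of maintenance: ${net_worst:,} to ${net_best:,}")    # $3,000,000 to $3,080,000
```

On gross figures, the portfolio as a whole pays back in about three months, which is the practical meaning of "optimizing for volume of impact."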

None required bleeding-edge technology. All used commercial tools configured for their specific processes. The innovation was strategic, not technical: optimizing for volume of impact, not sophistication of solution.

The 20%: Operational Transformation

The twenty percent represents process-level improvements: fundamentally changing how work gets done. These projects require rethinking workflows, not just speeding them up.

Intelligent Document Processing (IDP) falls here. It doesn't just make invoice processing faster; it eliminates the invoice processing queue entirely. Documents flow from receipt to validation to payment without human intervention, and people step in only to handle exceptions.

The 10%: Strategic Differentiation

The moonshots—the ten percent—create new capabilities or business models. Predictive equipment maintenance that prevents failures before sensors detect problems. Self-healing supply chains. Autonomous compliance monitoring.

These earn the investment, but only after the foundation is solid. Trying to build autonomous systems when you can't reliably extract data from PDFs is an expensive way to fail.

The Governance Paradox: Friction Creates Speed

Governance isn't a tax on AI—it's the enabler of AI at scale.

As of 2025, we see more AI projects stall because of governance and compliance issues than because of technical problems. Legal blocks initiatives because bias wasn't tested. Compliance rejects systems because audit trails don't exist. Security shuts down models that could leak sensitive data.

Organizations that treat governance as an afterthought see projects stall in legal review. Organizations that design governance into the process from day one move faster—because they're building systems that can actually be deployed.

The Regulatory Reality

Three frameworks now dominate:

EU AI Act: Risk-based tiers from "banned" to "minimal oversight." High-risk systems (recruitment, credit scoring, medical devices) face stringent requirements. Penalties for the most serious violations reach €35M or 7% of global annual turnover, whichever is higher.

NIST AI Risk Management Framework: Voluntary in the US but increasingly expected of federal contractors. The July 2024 Generative AI Profile (NIST AI 600-1) explicitly targets risks like hallucination and harmful content.

ISO 42001: The first certifiable international standard for AI management systems. It establishes a continuous Plan-Do-Check-Act cycle, and certification provides verifiable proof of governance maturity.

These aren't alternatives; they're complementary. The EU AI Act defines legal requirements. NIST provides a risk management process. ISO 42001 offers a management system framework.

What This Means Practically

A healthcare AI project we evaluated spent 40% of its budget on governance and compliance—not building the model, but proving it was safe, fair, and auditable. This wasn't overhead. It was the cost of operating in a regulated industry. Organizations that skip this step don't save 40%—they lose 100% when their project can't deploy.

For high-risk systems, expect:

- Formal impact assessments before development

- Data lineage documentation (where every training sample came from)

- Model cards explaining capabilities and limitations

- Audit trails for every decision

- Regular bias testing and mitigation

- Human oversight mechanisms
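One way to design these expectations into the process is to treat them as a deployment gate that reports what is still missing. The following is a minimal sketch under stated assumptions: the `GovernanceEvidence` class and its field names are hypothetical, one flag per item in the list above, and a real gate would point at actual documents and test results rather than booleans.

```python
from dataclasses import dataclass, fields


@dataclass
class GovernanceEvidence:
    """One flag per expectation listed above for high-risk systems."""
    impact_assessment: bool = False
    data_lineage: bool = False
    model_card: bool = False
    audit_trail: bool = False
    bias_testing: bool = False
    human_oversight: bool = False


def missing_evidence(evidence: GovernanceEvidence) -> list[str]:
    """Return the names of expectations that are not yet satisfied."""
    return [f.name for f in fields(evidence) if not getattr(evidence, f.name)]


def ready_to_deploy(evidence: GovernanceEvidence) -> bool:
    """A system passes the gate only when nothing is missing."""
    return not missing_evidence(evidence)


# Hypothetical project that documented the model but skipped everything else.
project = GovernanceEvidence(impact_assessment=True, model_card=True)
print(ready_to_deploy(project))   # False
print(missing_evidence(project))
# ['data_lineage', 'audit_trail', 'bias_testing', 'human_oversight']
```

Running such a check at project kickoff, rather than at legal review, surfaces the gaps while they are still cheap to close.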

The organizations winning at AI aren't the ones avoiding governance. They're the ones who've made governance so efficient that it becomes a competitive advantage.

Build vs Buy vs Partner

The most strategic decision in enterprise AI isn't which algorithm to use. It's what to own.

The Decision Framework

Build when:

- The capability creates competitive differentiation

- You have unique data or domain requirements

- The cost of vendor dependency exceeds development cost

Buy when:

- The capability is commodity (HR automation, expense processing)

- Time-to-value matters more than customization

- Continuous vendor improvement exceeds what you could deliver

Partner when:

- You need capabilities faster than you can build

- The domain requires specialized expertise

- You want to de-risk exploration before committing
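The criteria above can be turned into a simple tally to structure a discussion. This is a sketch, not a scoring method from the source: the `Capability` field names are hypothetical paraphrases of the nine bullets, every criterion is weighted equally, and ties are returned as ties rather than resolved.

```python
from dataclasses import dataclass


@dataclass
class Capability:
    # Build criteria
    differentiating: bool = False
    unique_data_or_domain: bool = False
    vendor_dependency_costlier_than_build: bool = False
    # Buy criteria
    commodity: bool = False
    time_to_value_critical: bool = False
    vendor_improves_faster: bool = False
    # Partner criteria
    needed_faster_than_buildable: bool = False
    needs_specialized_expertise: bool = False
    want_to_derisk_exploration: bool = False


def tally(c: Capability) -> dict[str, int]:
    """Count how many criteria are met for each option."""
    return {
        "build": sum([c.differentiating, c.unique_data_or_domain,
                      c.vendor_dependency_costlier_than_build]),
        "buy": sum([c.commodity, c.time_to_value_critical,
                    c.vendor_improves_faster]),
        "partner": sum([c.needed_faster_than_buildable,
                        c.needs_specialized_expertise,
                        c.want_to_derisk_exploration]),
    }


def recommend(c: Capability) -> list[str]:
    """Return the option(s) with the most criteria met, or [] if none apply."""
    counts = tally(c)
    best = max(counts.values())
    if best == 0:
        return []
    return [option for option, n in counts.items() if n == best]


# Hypothetical commodity capability such as expense processing.
expense_tool = Capability(commodity=True, time_to_value_critical=True)
print(recommend(expense_tool))  # ['buy']
```

A tie (for example, one build criterion and one partner criterion) is a signal that the decision needs judgment, which is why the function returns a list instead of forcing a single answer.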

The Hybrid Pattern

The emerging pattern is "buy the platform, build the brains." Use commercial infrastructure for hosting, monitoring, logging, orchestration. Retain control over model definitions, system prompts, and tool integrations that constitute your intellectual property.

For GenAI specifically, this often means:

- Buy: Foundation model access (GPT-4, Claude) via API

- Build: Retrieval pipelines (RAG), evaluation harnesses, domain-specific prompts

- Platform: LangSmith, Azure AI Studio, or similar for orchestration and observability

This minimizes undifferentiated heavy lifting while maximizing control over what differentiates your business.
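In code, "buy the platform, build the brains" amounts to keeping the purchased model behind a narrow interface while the prompt and retrieval logic stay in-house. The sketch below assumes the model is any callable from prompt text to response text; the vendor API wrapper itself is not shown. The word-overlap retrieval is a toy stand-in for a real RAG pipeline, and all names here are hypothetical.

```python
from typing import Callable

# The "bought" part: anything that turns a prompt into text.
# In practice this would wrap a vendor API client (not shown here).
Model = Callable[[str], str]

# The "built" part: prompt wording and retrieval logic are owned in-house.
SYSTEM_PROMPT = (
    "Answer using only the context provided. "
    "Say so if the context is insufficient."
)


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the question (toy retrieval)."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda d: len(q_words & set(d.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def answer(question: str, documents: list[str], model: Model) -> str:
    """Assemble the owned prompt and hand it to whichever model is plugged in."""
    context = "\n".join(retrieve(question, documents))
    prompt = f"{SYSTEM_PROMPT}\n\nContext:\n{context}\n\nQuestion: {question}"
    return model(prompt)


def stub_model(prompt: str) -> str:
    """Stand-in for a purchased model, useful for local tests."""
    return f"(stub) received {len(prompt)} characters"


docs = [
    "claims must be filed within 30 days",
    "office hours are nine to five",
    "fraud claims are escalated to review",
]
print(answer("how are fraud claims handled", docs, stub_model))
```

Because `answer` depends only on the `Model` signature, swapping one vendor for another changes a single argument and leaves the differentiating logic untouched.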

Cautionary Tale: A manufacturing company spent 18 months building custom predictive maintenance. Two months after deployment, their equipment vendor released an AI module trained on data from 10,000+ installations globally. Their internal model was trained on 200 machines. The vendor's model was objectively better, continuously updated, and cost less than their internal team's salaries.

They had built when they should have bought.

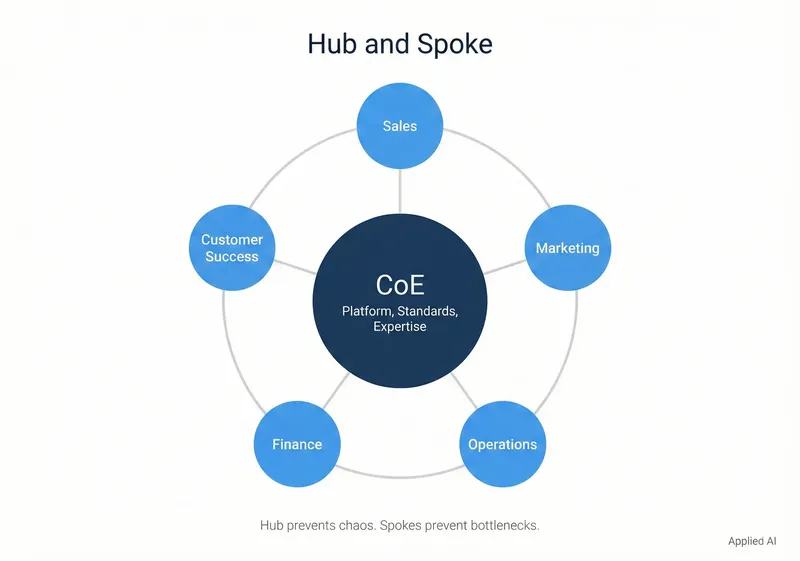

Operating Models: Hub-and-Spoke

How you organize AI capability determines what you can build.

Most organizations start decentralized: different business units experimenting independently. This creates agility but leads to duplicated effort, inconsistent tools, and solutions that can't scale. Every pilot becomes a one-off.

The natural reaction is centralization: create a Center of Excellence that standardizes tools and governs projects. This solves duplication but creates a bottleneck. All projects flow through the central team, which can't move fast enough to support every business need.

The mature model is federated hub-and-spoke:

The Hub (centralized AI team):

- Builds and maintains core AI platform

- Sets enterprise-wide standards (governance, security, ethics)

- Provides specialized expertise

- Runs high-priority strategic initiatives

The Spokes (embedded teams):

- AI specialists embedded directly in business units

- Deep domain knowledge of specific functions

- Build solutions using hub-provided platforms and standards

- Report to business unit leaders with a dotted line to the central AI team

This balances governance with agility. The hub prevents chaos. The spokes prevent bottlenecks.

The AI Champion Model

An alternative for the 70%: distributed champions within each department—domain experts with enough technical literacy to identify opportunities, work with central resources, and drive adoption.

A logistics company trained 40 champions across regional operations. Within six months, 15 small AI projects had been deployed, identified by champions rather than by the central innovation team. Projects ranged from route optimization to customer communication automation. Average project cost: $50K. Average payback: 4 months.

Champions turn AI from a central initiative into a distributed capability.

The Failure Modes

Understanding failure patterns is more valuable than studying success stories.

Pilot Purgatory

Projects show promise in controlled settings but never reach production.

- Designed for demo, not deployment: Used clean data that doesn't reflect production reality

- No business sponsor: IT-led initiatives without business ownership

- Underestimated "last mile" complexity: Ignored integration with legacy systems

Fix: Before funding, every pilot requires an executive business sponsor, a production deployment plan, and pre-defined success metrics.

The Talent Trap

Organizations treat AI talent shortage as a hiring problem. They compete for scarce ML engineers, lose bidding wars to tech giants, and still can't fill positions.

The better approach: develop talent internally. The acute shortage isn't in coding; it's in "translation," the combination of domain expertise and technical literacy found in people who can identify where AI adds value and work with specialists to implement it. These roles can't be hired. They must be grown.

Data Optimism

Teams assume data will be "good enough" and discover fundamental quality issues after building models.

A supply chain optimization project built sophisticated demand forecasting before discovering product categorization was inconsistent across regions. Same item, different SKUs, different hierarchy classifications. The models couldn't learn meaningful patterns because input data was incoherent.

They spent eight months on data cleanup before they could use the models built in month three.

Fix: Data readiness assessment before any model development. Verify quality, accessibility, governance. Unsexy. Critical. Non-negotiable.
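A readiness assessment can start with checks as simple as the one the supply chain team needed. The sketch below flags items that appear under more than one SKU across regions. The record layout (`item`, `region`, `sku` keys) and the sample values are hypothetical, and matching on a normalized item name is an assumption; real catalogs usually need fuzzier matching.

```python
from collections import defaultdict


def inconsistent_items(records: list[dict]) -> dict[str, set[str]]:
    """Map each item name to its SKUs when more than one SKU is in use.

    Item names are compared after trimming whitespace and lowercasing.
    """
    skus_by_item: dict[str, set[str]] = defaultdict(set)
    for record in records:
        key = record["item"].strip().lower()
        skus_by_item[key].add(record["sku"])
    return {item: skus for item, skus in skus_by_item.items() if len(skus) > 1}


# Hypothetical catalog extract from two regions.
records = [
    {"item": "Steel Bolt M8", "region": "EMEA", "sku": "SB-0008"},
    {"item": "steel bolt m8", "region": "APAC", "sku": "BOLT-M8-ST"},
    {"item": "Hex Nut M8", "region": "EMEA", "sku": "HN-0008"},
    {"item": "Hex Nut M8", "region": "APAC", "sku": "HN-0008"},
]

conflicts = inconsistent_items(records)
for item, skus in conflicts.items():
    print(f"{item}: {sorted(skus)}")
# steel bolt m8: ['BOLT-M8-ST', 'SB-0008']
```

A check like this takes minutes to run against an extract and would have surfaced the categorization problem before any forecasting model was built.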

Putting It Into Practice

The path from strategy to execution follows a consistent sequence. Organizations that succeed typically work through four questions:

1. Are you ready? Structured readiness assessment across data, infrastructure, skills, process, and culture. We identify gaps and sequence remediation.

2. Where do you start? Use case prioritization based on business value, technical feasibility, and organizational readiness. We bias toward the 70%.

3. How do you govern? Governance framework design aligned with EU AI Act, NIST RMF, and ISO 42001. Compliance as a design input, not a deployment blocker.

4. How do you scale? Operating model design and talent development strategy. The organizational muscle to go from one-off pilots to systematic capability.
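The prioritization in question 2 can be sketched as a weighted score over the three stated criteria. The 1-5 rating scale, the weights, and the sample use cases below are all illustrative assumptions, not values from the engagements described here.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    business_value: int  # 1 (low) to 5 (high)
    feasibility: int     # 1 (hard) to 5 (easy)
    readiness: int       # 1 (not ready) to 5 (ready)


# Hypothetical weights; they sum to 1.0 so scores stay on the 1-5 scale.
WEIGHTS = {"business_value": 0.4, "feasibility": 0.3, "readiness": 0.3}


def score(use_case: UseCase) -> float:
    """Weighted sum of the three criteria."""
    return sum(weight * getattr(use_case, field) for field, weight in WEIGHTS.items())


def prioritize(use_cases: list[UseCase]) -> list[UseCase]:
    """Return use cases ordered from highest to lowest score."""
    return sorted(use_cases, key=score, reverse=True)


candidates = [
    UseCase("Autonomous compliance monitoring", business_value=5, feasibility=2, readiness=1),
    UseCase("Support ticket routing", business_value=3, feasibility=5, readiness=5),
    UseCase("Contract clause suggestions", business_value=3, feasibility=4, readiness=3),
]

for use_case in prioritize(candidates):
    print(f"{use_case.name}: {score(use_case):.1f}")
```

With these inputs the modest ticket routing project ranks first and the moonshot ranks last, even though the moonshot has the highest business value. That is the bias toward the 70% expressed as arithmetic: feasibility and readiness together outweigh headline value.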

The Real Strategic Question

Enterprise AI strategy isn't about choosing technologies. It's about answering a simpler question: Are we building organizational capability or running experiments?

Experiments are valuable. They teach you what's possible. But they don't scale, don't compound, and don't create competitive advantage.

Capability building looks different:

- Systematic assessment and remediation of readiness gaps

- Disciplined prioritization based on value and feasibility

- Governance designed in, not bolted on

- Operating models that balance control and agility

- Talent development that grows capability internally

- Metrics that track realized value, not potential impact

The organizations that will win aren't those with the most sophisticated AI. They're the ones that built the foundations to deploy hundreds of AI initiatives reliably, safely, and at speed.

That's not a technology strategy. It's a transformation strategy.

References

BCG/Henderson Institute (2024). "How Generative AI Is Reshaping Enterprise Value Creation."

Deloitte (2024). "State of Generative AI in the Enterprise."

European Commission (2024). "EU Artificial Intelligence Act: Complete Guide."

ISO (2023). "ISO/IEC 42001:2023 Artificial Intelligence Management System."

McKinsey (2024). "The State of AI: How Organizations Are Rewiring to Capture Value."

NIST (2024). "AI Risk Management Framework: Generative AI Profile." NIST AI 600-1.

Further Reading

- AI Adoption & Enablement — Practitioner playbook for driving adoption

- LLM Application Lifecycle — Production patterns for LLMOps

This framework synthesizes patterns from 50+ enterprise AI engagements across financial services, healthcare, manufacturing, and logistics.