While Netflix and Uber have normalized continuous model deployment and autonomous decisioning, most enterprises in regulated sectors still rely on quarterly batch predictions. The latency gap has shifted from a technical debt issue to an existential economic one.

Netflix drives a 20% increase in engagement through continuous model deployment. Uber processes 10 million predictions per second through Michelangelo. NAB achieves a 50% lift in conversion rates through real-time decisioning. Meanwhile, most enterprises run quarterly batch models that predict who will churn without answering when to intervene or which intervention will work.

The difference isn't the models. It's everything around them.

This article maps the evolution from correlation-based prediction toward the emerging frontier of autonomous optimization. We examine why the industry's historical focus on predictive accuracy misses the point, how causal inference fundamentally changes the economics of customer intervention, and why MLOps maturity—not model sophistication—determines who captures value from AI investments.

The Three-Stage Evolution

Customer analytics is undergoing a structural transformation. The techniques that drove competitive advantage five years ago are now table stakes. The frontier has moved—and continues moving.

Stage 1: Causal Inference

The first transition moves from correlation to causation. Traditional response models answer: "Who will churn?" or "Who will convert?" These questions are necessary but insufficient. They cannot distinguish between customers who converted because of a marketing treatment and those who would have converted anyway.

This matters financially. A standard churn model identifies at-risk customers. A basic retention system applies a rule: "For customers with churn score above 80%, send a 20% discount." This approach is demonstrably wasteful. As Causal ML for Marketing details, customers segment into four distinct response patterns:

- Persuadables: Will stay only if contacted—the true lift from any campaign

- Sure Things: Will stay regardless—targeting them wastes resources

- Lost Causes: Will leave regardless—resources wasted

- Sleeping Dogs: Will churn because you contacted them—targeting actively harms the business

A truly sophisticated system asks a different question: "What is the incremental impact of this specific offer on this specific customer's probability of staying?" Implementations of this approach have yielded an 18% ROI improvement and a 27% gain in targeting efficiency over traditional static models (Devriendt et al., 2021).

The methodology behind the segments: These four personas emerge from causal ML meta-learners (T-Learners, X-Learners, or Causal Forests) trained on randomized experiment data. The models estimate individual-level treatment effects—not just "will they churn" but "will they churn differently if we contact them." See Pillar 08 for detailed methodology.
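To make the T-Learner idea concrete, here is a minimal sketch on synthetic data. It assumes a randomized experiment with a single customer feature and uses a plain least-squares model as the base learner; the data-generating process, variable names, and coefficients are all illustrative assumptions, not taken from any cited study. Production work would more likely use a library such as EconML or scikit-uplift.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic randomized experiment (all values are illustrative assumptions).
n = 4000
x = rng.uniform(-1, 1, size=n)          # one customer feature, e.g. scaled tenure
treated = rng.integers(0, 2, size=n)    # random assignment: 1 = contacted
# Assumed ground truth: contact helps when x > 0 and hurts when x < 0.
p_stay = 0.6 + 0.1 * x + treated * (0.2 * x)
stayed = (rng.uniform(size=n) < p_stay).astype(float)


def fit_linear(features, outcome):
    """Least-squares linear probability model; returns [intercept, slope]."""
    design = np.column_stack([np.ones_like(features), features])
    coef, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    return coef


def predict(coef, features):
    return coef[0] + coef[1] * features


# T-Learner: fit one outcome model per experiment arm.
coef_treated = fit_linear(x[treated == 1], stayed[treated == 1])
coef_control = fit_linear(x[treated == 0], stayed[treated == 0])


def estimated_uplift(features):
    """Estimated P(stay | contacted) minus P(stay | not contacted)."""
    return predict(coef_treated, features) - predict(coef_control, features)


uplift_high = float(estimated_uplift(np.array([0.8]))[0])   # Persuadable-like
uplift_low = float(estimated_uplift(np.array([-0.8]))[0])   # Sleeping-Dog-like
print(f"uplift at x=0.8: {uplift_high:+.3f}, at x=-0.8: {uplift_low:+.3f}")
```

Customers with clearly positive estimated uplift behave like Persuadables, those with clearly negative uplift like Sleeping Dogs, and those near zero are Sure Things or Lost Causes depending on their baseline retention probability.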

The shift requires infrastructure most organizations lack: proper A/B testing culture, holdout groups maintained over time, and the organizational discipline to measure incrementality rather than just response.

Stage 2: Prescriptive Decisioning

The second transition moves from insight to action. Knowing who to target and what works isn't enough. The question becomes: "What specific action should we take with this specific customer at this specific moment?"

This is the domain of Next-Best-Action (NBA) engines. Forrester identifies Pegasystems' Customer Decision Hub as the "gold standard" for enterprise Real-Time Interaction Management (Forrester Wave: RTIM, 2024). The system processes real-time data streams, arbitrates between thousands of potential actions, and delivers a single optimized recommendation in under 200 milliseconds.

The results from production deployments:

- National Australia Bank: 50% lift in conversion rates after implementing real-time decisioning (Pega case study)

- T-Mobile: 8-point increase in Net Promoter Score through NBA-driven interactions

- Oi (Brazilian telecom): $140 million incremental revenue in year one through NBA retention

The pattern across these cases: the most effective architectures do not merely react to scores. They arbitrate (weigh competing priorities) between multiple potential actions—discount, nurture message, proactive service call—by predicting the unique uplift of each action for each customer in their current context. This transforms retention from a reactive rule into a profit-optimizing calculation.
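The arbitration step can be sketched as an argmax over expected incremental profit. This is a simplified illustration, not how any vendor's engine works: the action names, costs, uplift estimates, and the `value_if_retained` figure are hypothetical, and the uplift values are assumed to come from a separately trained uplift model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    cost: float  # cost of delivering the action, in currency units


def best_action(uplift_by_action, value_if_retained, actions):
    """Pick the action with the highest expected incremental profit.

    uplift_by_action maps action name -> estimated change in retention
    probability for this customer. Returns (action, profit), or
    (None, 0.0) when no action beats doing nothing.
    """
    best, best_profit = None, 0.0
    for action in actions:
        profit = uplift_by_action[action.name] * value_if_retained - action.cost
        if profit > best_profit:
            best, best_profit = action, profit
    return best, best_profit


# Hypothetical action catalog and per-customer uplift estimates.
actions = [
    Action("discount_20pct", cost=40.0),
    Action("nurture_email", cost=0.5),
    Action("service_call", cost=12.0),
]
uplift = {"discount_20pct": 0.08, "nurture_email": 0.01, "service_call": 0.05}

choice, profit = best_action(uplift, value_if_retained=600.0, actions=actions)
print(choice.name, round(profit, 2))
```

In this made-up case the discount has the largest uplift but the proactive service call wins once delivery cost is netted out, which is the difference between reacting to a score and optimizing for profit.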

Stage 3: Autonomous Optimization

The frontier moves from recommendation to execution. AI agents perceive their environment, learn from outcomes, and continuously optimize toward business objectives with minimal human oversight.

Reinforcement learning-based dynamic pricing provides the clearest current examples:

- Uber: Surge pricing adjusts fares across thousands of micro-markets simultaneously, balancing driver supply with rider demand in real time

- Amazon: Adjusts prices on millions of items every few minutes, analyzing competitor prices, browsing history, and device type to infer price sensitivity

- Marriott: Analyzes over a decade of historical booking data alongside real-time factors (local events, weather, flight schedules, competitor pricing) to optimize room rates. Prices sometimes change several times per day, delivering a 3-7% revenue increase

A 2025 study demonstrated that deep RL algorithms (Proximal Policy Optimization) achieved near-optimal pricing and inventory results with 75.6% less computation time than traditional dynamic programming methods (Chen et al., 2025).

The vision extends beyond pricing. A fully autonomous lifecycle engine could modulate acquisition incentives, influence repeat purchase behavior, and manage churn risk—all through the lever of personalized pricing. The "action" becomes the price point; the objective function becomes long-term, risk-adjusted customer lifetime value.
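The "price as action" framing can be illustrated with an epsilon-greedy multi-armed bandit, a much simpler relative of the deep RL methods mentioned above (it has no state and no long-term planning). Everything here is an assumption for illustration: the price grid, the simulated demand curve, and the exploration rate. Restricting the agent to a fixed list of allowed prices is one basic form of guardrail.

```python
import random


def run_pricing_bandit(prices, purchase_prob, rounds=20000, epsilon=0.1, seed=0):
    """Epsilon-greedy search for the price with the highest mean revenue."""
    rng = random.Random(seed)
    pulls = [0] * len(prices)
    mean_revenue = [0.0] * len(prices)
    arms = range(len(prices))
    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(len(prices))                 # explore
        else:
            arm = max(arms, key=lambda i: mean_revenue[i])   # exploit
        sold = rng.random() < purchase_prob(prices[arm])
        revenue = prices[arm] if sold else 0.0
        pulls[arm] += 1
        # Incremental update of the running mean revenue for this price.
        mean_revenue[arm] += (revenue - mean_revenue[arm]) / pulls[arm]
    best = max(arms, key=lambda i: mean_revenue[i])
    return prices[best], mean_revenue, pulls


def simulated_demand(price):
    """Assumed purchase probability; a real system would observe this."""
    return max(0.0, 1.0 - price / 20.0)


allowed_prices = [5.0, 10.0, 15.0]   # hard bounds on what the agent may charge
best_price, estimates, pulls = run_pricing_bandit(allowed_prices, simulated_demand)
print(best_price, [round(e, 2) for e in estimates], pulls)
```

Under the assumed demand curve, expected revenue per offer is 3.75, 5.00, and 3.75 for the three prices, so the bandit should settle on the middle price. A lifecycle engine would replace per-transaction revenue with a long-term, risk-adjusted CLV objective.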

Critical caveat for regulated industries: In insurance, lending, and financial services, autonomous pricing algorithms face significant legal constraints. Fair lending laws (ECOA, Fair Housing Act) and state insurance regulations prohibit pricing based on protected characteristics. Crucially, algorithms often inadvertently reconstruct these characteristics (e.g., zip code as a proxy for race), creating "disparate impact" liability. An RL agent optimizing purely for profit can learn to discriminate against protected classes through such proxies unless it is explicitly constrained. Production implementations require fairness-aware RL with hard constraints on disparate impact, regular fairness audits, and human oversight with "kill switch" capabilities. The Uber and Amazon examples operate in far less regulated pricing domains; regulated industries must proceed with extreme caution.
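One simple audit that can sit in front of a pricing policy is a disparate impact ratio check. The sketch below is illustrative only: the group labels and outcomes are made up, and the 0.8 threshold borrows the "four-fifths" heuristic from US employment guidelines as an assumption. It is not a legal standard for pricing, and a real program would be designed with compliance counsel.

```python
def disparate_impact_ratio(outcomes, groups, protected, reference):
    """Ratio of favorable-outcome rates: protected group vs reference group."""

    def rate(group):
        members = [o for o, g in zip(outcomes, groups) if g == group]
        if not members:
            raise ValueError(f"no observations for group {group!r}")
        return sum(members) / len(members)

    reference_rate = rate(reference)
    if reference_rate == 0:
        raise ValueError("reference group has no favorable outcomes")
    return rate(protected) / reference_rate


def passes_fairness_gate(ratio, threshold=0.8):
    """Assumed heuristic gate; the threshold is a policy choice."""
    return ratio >= threshold


# 1 = customer was offered the favorable price tier (made-up audit sample).
outcomes = [1, 1, 1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

ratio = disparate_impact_ratio(outcomes, groups, protected="b", reference="a")
print(round(ratio, 2), passes_fairness_gate(ratio))
```

Here group "b" receives the favorable tier half as often as group "a", so the gate fails and the policy would be held back for review rather than deployed.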

Figure 1: Three-Stage Evolution

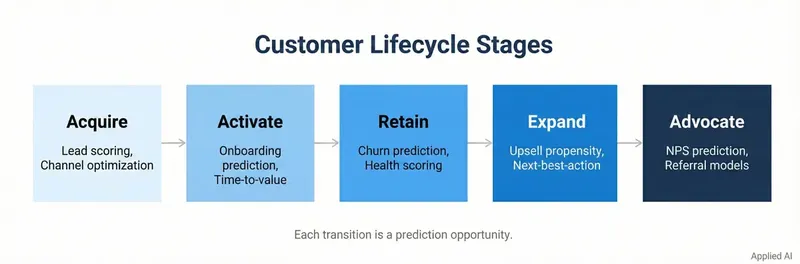

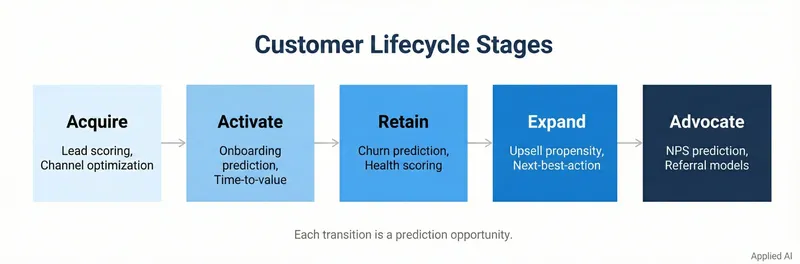

The Lifecycle Stages: What Actually Works

Different analytical techniques apply at different points in the customer journey. The optimal approach at acquisition differs fundamentally from retention.

Acquisition: Lead Scoring Has Crossed the Maturity Threshold

Machine learning for B2B lead scoring has moved from competitive advantage to table stakes. A 2025 study using real-world data from January 2020 to April 2024 tested fifteen supervised classification algorithms. The Gradient Boosting Classifier achieved 98.39% accuracy with ROC AUC of 0.9891 (Applied Sciences, 2025).

The most predictive features validate established marketing fundamentals: Lead Source, Reason for State, Lead Classification, Product interest, and Number of Responses. The technology is mature. Salesforce Einstein builds tailored models from organization-specific conversion history, handling data sparsity by leveraging anonymized cross-customer data when needed. HubSpot offers hybrid approaches combining rule-based and AI-assisted scoring.

The implementation challenge isn't technical. Success depends on a clean feedback loop where sales teams consistently update final lead status in the CRM. Without high-fidelity outcome data, even sophisticated models train on garbage. This dependency often forces organizations to address foundational data governance issues they previously tolerated.

Retention: The Churn Prediction Ceiling

Churn prediction has reached a performance ceiling. A 2025 meta-analysis across insurance, ISP, and telecom datasets found ensemble deep learning models and XGBoost consistently achieve 95-98% accuracy (Applied AI in Customer Management, 2025). The annual churn rate in telecommunications averages 15-25%—predicting it well is no longer differentiating.

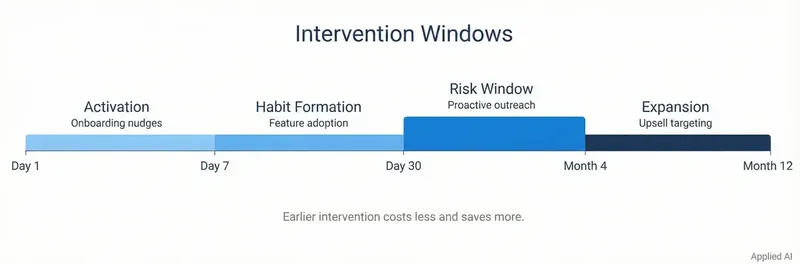

The frontier has moved to intervention optimization:

When to intervene: Early intervention windows (30-60 days after initial warning signals) show higher success rates than delayed efforts (180+ days). But optimal timing varies by industry, product, and customer segment.

Which intervention: The shift from rule-based ("high churn score → send discount") to uplift-optimized ("which action maximizes this customer's expected CLV net of intervention cost") represents the current competitive edge.

Integration challenge: The most accurate churn model delivers zero value if its output can't trigger a personalized retention offer in the customer's preferred channel within the critical intervention window. This is where NBA engines become essential.

Customer Lifetime Value: Beyond RFM

CLV modeling has evolved from simple heuristics to probabilistic frameworks that account for customer heterogeneity and uncertain "death."

Traditional RFM (Recency, Frequency, Monetary) segmentation describes what happened. It cannot predict what will happen.

Probabilistic models like BG/NBD (Beta-Geometric/Negative Binomial Distribution) make explicit assumptions about customer behavior:

- Customers make purchases according to a Poisson process with individual-specific rate λ

- Purchase rates vary across customers according to a gamma distribution

- After any transaction, a customer becomes inactive with probability p

- Dropout probabilities vary across customers according to a beta distribution

These models explicitly model customer "death"—the unobserved point at which someone stops being a customer—enabling more accurate value projections than simple extrapolation.
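The four assumptions above define a generative process, which can be simulated directly. The sketch below does only that: it draws a purchase rate and dropout probability per customer and simulates repeat purchases over a fixed horizon. It does not fit the model to data, and the parameter values (`r`, `alpha`, `a`, `b`) and the 52-week horizon are illustrative assumptions.

```python
import random


def simulate_customer(r, alpha, a, b, horizon, rng):
    """Simulate one customer's repeat purchases under the BG/NBD assumptions.

    Returns (purchase_times, alive_at_horizon).
    """
    # Purchase rate lambda ~ Gamma(shape=r, rate=alpha); gammavariate takes scale.
    purchase_rate = rng.gammavariate(r, 1.0 / alpha)
    # Per-transaction dropout probability p ~ Beta(a, b).
    dropout_prob = rng.betavariate(a, b)

    t, purchases = 0.0, []
    while True:
        t += rng.expovariate(purchase_rate)  # Poisson process: exponential gaps
        if t > horizon:
            return purchases, True           # still active when observation ends
        purchases.append(t)
        if rng.random() < dropout_prob:
            return purchases, False          # customer "dies" after this purchase


rng = random.Random(7)
results = [simulate_customer(0.25, 4.0, 0.8, 2.5, 52.0, rng) for _ in range(1000)]

alive_share = sum(alive for _, alive in results) / len(results)
mean_purchases = sum(len(p) for p, _ in results) / len(results)
print(f"alive at horizon: {alive_share:.1%}, mean repeat purchases: {mean_purchases:.2f}")
```

Note that an analyst never observes the `alive` flag in real data. A customer with no recent purchases may be dead or merely slow, and the fitted model's job is to estimate that probability from recency and frequency alone.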

Machine learning approaches leverage features unavailable to probabilistic models: behavioral engagement (email opens, site visits), product affinity, support interactions, and contextual factors.

The strategic application: MetLife analyzes life events (marriage, home purchase) to predict evolving insurance needs and proactively offer relevant products. Allstate uses claim frequency and premium payment history to identify high-CLV customers at churn risk for targeted retention. Oracle Unity CDP provides pre-built CLV models used by automotive and retail clients to identify high-value customers for campaigns.

A critical insight: CLV must be profit-based, not revenue-based. A customer can easily generate negative value if Customer Acquisition Cost exceeds their lifetime contribution. This requires accurate cost allocation—a significant accounting challenge but essential for meaningful analysis.

The "Unprofitable VIP" problem: In retail, serial returners may rank in the top revenue decile while generating negative profit after return processing, shipping, and restocking costs. In insurance, a high-premium policyholder with frequent claims may appear high-value by revenue but destroy margin once losses are paid. Revenue-based CLV would prioritize retaining these customers; profit-based CLV correctly identifies them as value-destroying.
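A small worked example shows how the ranking flips. The formula is a deliberately simple discounted sum with a constant retention rate, and every figure (revenue, margin, acquisition cost, retention, discount rate) is a hypothetical number chosen for illustration.

```python
def simple_clv(annual_amount, retention_rate, discount_rate,
               acquisition_cost=0.0, years=5):
    """Discounted sum of expected annual amounts, minus acquisition cost.

    Pass annual revenue for a revenue-based view, or annual contribution
    margin (revenue minus all servicing costs) for a profit-based view.
    """
    value = sum(
        annual_amount * retention_rate ** t / (1 + discount_rate) ** t
        for t in range(years)
    )
    return value - acquisition_cost


# Hypothetical customers: a serial returner and a modest, steady buyer.
returner = {"revenue": 4000.0, "margin": -150.0, "cac": 80.0}
steady = {"revenue": 900.0, "margin": 250.0, "cac": 80.0}

revenue_clv_returner = simple_clv(returner["revenue"], 0.9, 0.1)
revenue_clv_steady = simple_clv(steady["revenue"], 0.9, 0.1)
profit_clv_returner = simple_clv(returner["margin"], 0.9, 0.1, returner["cac"])
profit_clv_steady = simple_clv(steady["margin"], 0.9, 0.1, steady["cac"])

print(f"revenue view: returner {revenue_clv_returner:.0f} vs steady {revenue_clv_steady:.0f}")
print(f"profit view:  returner {profit_clv_returner:.0f} vs steady {profit_clv_steady:.0f}")
```

By revenue the returner looks like the customer to protect; by profit the returner is negative and the steady buyer is the one worth retaining.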

Figure 2: CLV Model Evolution

Next-Best-Action Architecture: The Integration Challenge

Models exist for each lifecycle stage: lead scoring, churn prediction, CLV estimation, price optimization. The strategic challenge is orchestrating them into coherent customer journeys.

The Real-Time Decisioning Stack

A production-grade NBA engine comprises multiple layers:

Data Integration Layer: Unifies customer data from CRM, transactional databases, behavioral event streams, and third-party enrichment sources. Snowplow provides behavioral data platforms that capture user activity in minute detail specifically for decisioning engines.

Feature Store: The critical bridge between offline training and real-time serving. Feature stores (Feast, Tecton, Databricks Feature Store) solve the training-serving skew problem: ensuring the features used to train models match exactly what's available at prediction time. They provide point-in-time correctness for training (preventing data leakage), low-latency feature serving for real-time inference, and versioning for reproducibility. Without a feature store, NBA engines suffer from subtle inconsistencies between development and production that silently degrade performance.

Feature Engineering: Transforms raw data into predictive features—recency/frequency calculations, engagement scores, predicted CLV, churn risk, product affinities, contextual signals (device type, location, time of day).

Model Orchestration: Manages multiple predictive models—each answering a specific question (Will they convert? Will they churn? What's their price sensitivity?)—and combines outputs into a coherent decision framework.

Business Rules and Constraints: Encodes logic that models can't capture: budget limits, communication frequency caps, product availability, regulatory restrictions, brand guidelines.

Action Selection: The core decisioning engine. For each customer interaction, it evaluates all permissible actions, predicts the expected outcome of each (using uplift models), and selects the action that maximizes the objective function (typically expected CLV or profit).

Channel Execution: Delivers selected action to the appropriate touchpoint—email, mobile push, web personalization, call center script, in-store POS—with sub-200ms latency requirements for real-time channels.

Feedback Loop: Captures outcomes, measures actual vs. predicted results, feeds data back to retrain models.
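The point-in-time correctness mentioned under the Feature Store layer is easy to get wrong, so a small sketch may help. It is a standard-library illustration of the idea, not the API of Feast, Tecton, or Databricks: each training label is joined to the latest feature value known at or before the label's timestamp, never a later one. The feature name, customer ID, and day numbers are hypothetical.

```python
from bisect import bisect_right


def feature_as_of(history, as_of):
    """Latest feature value with timestamp <= as_of, or None if none exists.

    history: list of (timestamp, value) pairs sorted by timestamp.
    """
    timestamps = [ts for ts, _ in history]
    idx = bisect_right(timestamps, as_of)
    return history[idx - 1][1] if idx else None


# Hypothetical feature log: (day number, purchases in trailing 30 days).
purchase_count_30d = {"cust_1": [(1, 0), (10, 2), (25, 5)]}

# Training labels: (customer, day the label was observed, churned flag).
labels = [("cust_1", 12, 0), ("cust_1", 30, 1)]

training_rows = [
    (cust, day, feature_as_of(purchase_count_30d[cust], day), churned)
    for cust, day, churned in labels
]
print(training_rows)
```

The label observed on day 12 is paired with the value written on day 10, not the day-25 value that only became known later. Joining on "latest value" instead would leak future information into training and inflate offline accuracy.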

Figure 3: NBA Architecture Stack

Where Implementations Fail

The failure mode isn't individual models—ensemble models for churn prediction are well-established, uplift modeling libraries are mature. Breakdowns occur at integration:

Data Latency Conflicts: The churn model requires last night's batch processing. The behavioral model needs real-time event streams. The CLV model uses monthly aggregates. Synchronizing different refresh cycles while maintaining consistency is a significant engineering challenge.

Competing Objectives: The retention model wants to offer a discount. The margin optimization model wants to protect price. The inventory model wants to clear slow-moving stock. Without a unified objective function that explicitly weighs trade-offs, the system degenerates into competing recommendations.

Channel Limitations: The NBA engine determines that the optimal action for customer X is a personalized video via the mobile app, but customer X doesn't have the app. Fallback logic that maintains strategic coherence across channel constraints is complex.

Organizational Silos: Marketing owns engagement models. Sales owns lead scoring. Finance owns CLV calculations. IT owns data infrastructure. Without cross-functional alignment, the NBA engine becomes a proof-of-concept that never reaches production.
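The channel fallback problem described under Channel Limitations can be sketched as a walk down a ranked action list, filtered by business constraints. This is a minimal illustration under stated assumptions: the actions are already ranked by expected value, the only constraints are channel reachability and a weekly contact cap, and all names and the cap of three are hypothetical.

```python
def select_with_fallback(ranked_actions, customer_channels,
                         contacts_this_week, weekly_cap=3):
    """Return the best-ranked action the customer can actually receive.

    ranked_actions: list of dicts with "name" and "channel", best first.
    Returns None when the contact cap is reached or nothing is deliverable.
    """
    if contacts_this_week >= weekly_cap:
        return None  # frequency cap overrides any model recommendation
    for action in ranked_actions:
        if action["channel"] in customer_channels:
            return action
    return None


ranked = [
    {"name": "personalized_video", "channel": "mobile_app"},
    {"name": "retention_email", "channel": "email"},
    {"name": "call_center_offer", "channel": "phone"},
]

# Customer X has no mobile app installed.
chosen = select_with_fallback(ranked, {"email", "phone"}, contacts_this_week=1)
capped = select_with_fallback(ranked, {"email", "phone"}, contacts_this_week=3)
print(chosen["name"], capped)
```

Real fallback logic is harder than this because the second-best action on another channel may not serve the same strategic goal, which is why the ranking itself usually needs to be channel-aware.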

MLOps: The Hidden Determinant

The primary barrier to capturing value from customer analytics isn't model sophistication. It's the operational maturity to deploy and maintain models at scale.

The MLOps Lifecycle

Data Engineering: Automated pipelines to ingest, validate, clean, and transform raw data. Apache Airflow for workflow orchestration, cloud-based warehousing for processing.

Experiment Tracking: Versioning code, data, hyperparameters, and performance metrics across experimental runs. MLflow provides open-source capabilities; cloud platforms offer managed services.

Model Deployment (CI/CD): Automated testing and deployment of trained models as production services. Uber's Michelangelo enables one-click deployment and manages over 5,000 production models handling 10 million predictions per second.

Model Monitoring: Continuous detection of data drift (changes in input distributions), concept drift (changes in relationships between features and outcomes), and performance degradation. Conventional code stays correct until someone changes it. An ML model can start to degrade as soon as live data diverges from the data it was trained on, even when nothing in the code has changed.

Governance and Compliance: Model registries, access controls, fairness and explainability requirements, audit trails for regulatory compliance.
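For the data drift part of model monitoring, one widely used summary statistic is the Population Stability Index (PSI), which compares a feature's binned distribution at training time with its distribution in live traffic. The sketch below is a minimal standard-library version; the equal-width binning, the floor for empty bins, and the 0.25 alert level (a common rule of thumb, not a standard) are assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a live sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]        # training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]   # live values, shifted upward

psi_same = population_stability_index(baseline, baseline)
psi_drift = population_stability_index(baseline, shifted)
print(f"no drift: {psi_same:.3f}, drifted: {psi_drift:.3f}, alert: {psi_drift > 0.25}")
```

PSI only flags input drift. Concept drift needs outcome data, so it is usually tracked by comparing predicted and realized results through the feedback loop.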

The Business Case: Production Evidence

- Netflix: Continuous delivery pipeline with A/B testing drives a 20% increase in user engagement

- Uber: Michelangelo manages 5,000+ models at 10 million predictions/second—impossible without mature MLOps

- Starbucks: "Deep Brew" platform powers personalized offers, inventory optimization, and staffing—credited with significant revenue growth

- Adidas: MLOps-deployed targeting models achieve a 20% increase in online sales

The pattern is consistent: organizations treating MLOps as infrastructure investment rather than technical overhead achieve sustained competitive advantage.

MLOps as Immune System

A mature MLOps practice functions as an organization's immune system for AI investments. It doesn't merely deploy capabilities—it provides the monitoring, defense, and adaptation necessary for long-term effectiveness.

Deploying an ML model into a business process is like an organ transplant—a foreign object introduced into a living system. Without support infrastructure, the "transplant" is rejected: predictions become inaccurate, business users lose trust, regulatory violations emerge, or upstream data changes break everything.

The MLOps framework provides defenses:

- Model monitoring acts as a sensory system, scanning for threats like data drift

- Automated retraining provides adaptive response when threats are detected

- Governance ensures models adhere to rules on security, privacy, and ethics

- A/B testing serves as a controlled clinical trial before full rollout

This explains why organizations investing heavily in MLOps (Netflix, Uber, Starbucks) successfully scale AI, while those treating it as a "deployment" task see projects fail to deliver lasting value.

The Emerging Frontier

Three research trends will define the next generation of customer analytics:

Causal Representation Learning (CRL)

Beyond estimating effects of known interventions toward discovering causal structures from observational data. Models learning causal relationships are inherently more robust and transferable than correlation-based alternatives. For marketing: discovering that a specific product feature causes higher engagement, which causes lower churn, enables upstream interventions impossible with correlation alone.

Maturity: Experimental. Active academic research with dedicated workshops at major AI conferences. Practical marketing applications nascent but potentially transformative.

Simulation-Based Planning

Digital twins and virtual market representations for testing complex strategies risk-free. Monte Carlo simulation models key variables (conversion rate, order value, cost-per-click) as probability distributions rather than fixed points. Thousands of simulations produce ROI probability distributions—richer decision support than point estimates.

Maturity: Early Adoption. Used in finance and operations for decades; marketing applications growing.
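A Monte Carlo campaign simulation of the kind described above can be sketched in a few lines. The distributions and their parameters are assumptions picked for illustration (a beta distribution for conversion rate, a lognormal for order value, a triangular for cost-per-click); in practice they would be fitted to historical campaign data.

```python
import random
import statistics


def simulate_campaign_roi(n_sims=10000, clicks=5000, seed=42):
    """Return a list of simulated ROI values for one campaign."""
    rng = random.Random(seed)
    rois = []
    for _ in range(n_sims):
        conversion_rate = rng.betavariate(20, 980)        # mean around 2%
        order_value = rng.lognormvariate(4.0, 0.3)        # median around 55
        cost_per_click = rng.triangular(0.5, 1.5, 0.9)    # low, high, mode
        revenue = clicks * conversion_rate * order_value
        spend = clicks * cost_per_click
        rois.append((revenue - spend) / spend)
    return rois


rois = simulate_campaign_roi()
cuts = statistics.quantiles(rois, n=20)   # 19 cut points: 5%, 10%, ..., 95%
p5, p95 = cuts[0], cuts[-1]
median_roi = statistics.median(rois)
prob_loss = sum(r < 0 for r in rois) / len(rois)

print(f"median ROI {median_roi:.1%}, 5th-95th percentile {p5:.1%} to {p95:.1%}")
print(f"probability of losing money: {prob_loss:.1%}")
```

The output is a distribution rather than a single number, so a planner can ask how likely the campaign is to lose money instead of relying on one expected-ROI figure.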

Agentic AI

Autonomous agents perceiving environments, reasoning, planning, and executing toward business objectives. "Agentic pricing" gives AI an objective ("maximize Q3 revenue for product line X") and empowers dynamic strategy management. The foundational components exist today—Uber and Amazon's systems are early specialized agents. Generalization toward broader objectives is the trajectory.

Maturity: Experimental. Critical research gaps remain in safety, control, and alignment. How do you ensure an autonomous pricing agent doesn't learn harmful strategies (for example, price gouging that damages the brand)? How do you build robust guardrails?

The Path Forward

The trajectory is clear: from prediction to causation to prescription to autonomy. But the path isn't linear, and most organizations aren't prepared for later stages before mastering earlier ones.

Stage 1 requires analytical rigor: Running controlled experiments, building uplift models, establishing causal relationships. This is primarily a data science capability—and most organizations haven't built it.

Stage 2 requires operational excellence: Deploying NBA engines, integrating across channels, delivering recommendations with sub-200ms latency. This is marketing operations and engineering capability.

Stage 3 requires strategic trust: Allowing autonomous agents to make decisions, setting appropriate objective functions, building guardrails. This is leadership and governance capability.

Most organizations fail not because they lack sophisticated models, but because they attempt Stage 2 without mastering Stage 1, or pursue Stage 3 without MLOps maturity to maintain it.

The winning approach:

- Start with causal understanding (uplift modeling on controlled experiments)

- Build operational capability (NBA infrastructure with robust data pipelines)

- Only then explore autonomous optimization (RL-based systems with clear constraints)

The models are commoditizing. MLOps maturity and cross-functional integration are the differentiators. Organizations building this foundation now will capture disproportionate value as capabilities mature. Those waiting for perfect technology will find themselves perpetually behind.

Further Reading

Academic Foundations

- Devriendt, F., et al. (2021). "A Survey of Methods for Model-Free Reinforcement Learning in Marketing." Journal of Revenue and Pricing Management.

- Athey, S., & Imbens, G. (2016). "Recursive Partitioning for Heterogeneous Causal Effects." PNAS.

- Fader, P.S., Hardie, B.G.S., & Lee, K.L. (2005). "RFM and CLV: Using Iso-value Curves for Customer Base Analysis." Journal of Marketing Research.

Industry Research

- Forrester Wave: Real-Time Interaction Management, 2024

- Deloitte: State of AI in the Enterprise, 2024

- McKinsey: The State of AI in 2024

Implementation Resources

- scikit-uplift: Python library for uplift modeling

- EconML: Microsoft's library for causal inference

- MLflow: Open-source MLOps platform

Related: Causal ML for Marketing provides detailed methodology for uplift modeling and the four customer personas framework.