The Problem: Everyone's Building Agents (Most Shouldn't Be)

Walk into any enterprise AI initiative and you'll hear the same conversation. "We need an agent for that." Customer support? Agent. Document processing? Agent. Internal tooling? Multi-agent system with ReAct loops and tool orchestration.

The logic seems sound. Large language models can reason. They can use tools. They can maintain context across interactions. Why wouldn't you want an autonomous system that handles complex tasks end-to-end?

Here's why: because most tasks aren't complex.

The AI agent hype has created a peculiar blind spot. Teams reach for sophisticated orchestration frameworks before asking whether a lookup table would suffice. They implement multi-step reasoning chains for problems that a single prompt handles. They build elaborate tool-calling systems when a regex would do the job.

This isn't a theoretical concern. We've observed it repeatedly in enterprise environments, and the research confirms the pattern. In an analysis of 240 production AI deployments, 60.4% relied on anecdotal evidence rather than rigorous measurement (ZenML LLMOps Database, 2025). Teams couldn't quantify whether their sophisticated architectures actually outperformed simpler alternatives because they never tested simpler alternatives.

The costs of over-engineering compound quickly:

Reliability cliffs appear. Hugo Bowne-Anderson, speaking about production agent deployments, put it bluntly: "85-90% accuracy per tool call. Four or five calls? It's a coin flip" (Vanishing Gradients, 2025). Multi-step agent architectures multiply failure points.

Costs scale unexpectedly. In our benchmarks, LangGraph consumed about 89% more tokens than a native implementation for equivalent tasks: 54,060 tokens versus 28,529, so roughly 47% of LangGraph's token usage was overhead. At scale, that overhead becomes significant.

Debugging becomes archaeology. When an agent follows a multi-step reasoning path through three tools and four LLM calls, finding the failure point requires sophisticated observability infrastructure that most teams don't have.

The alternative isn't avoiding AI. It's matching the solution complexity to the problem complexity: a tiered approach where simpler methods handle simpler cases and agents are reserved for genuinely complex reasoning tasks.

Google's recent "Introduction to Agents" paper proposes a similar taxonomy, classifying agentic systems from Level 0 (core reasoning, no tools) through Level 4 (self-evolving systems) (Google, 2025). Our framework takes a practitioner focus: what actually works in enterprise deployments, and when do you need to step up to the next tier?

Our Framework: Tier 0 Through Tier 3

The Agent Complexity Spectrum classifies solutions by their reasoning requirements, not their marketing appeal. Each tier represents a step function in complexity, cost, and failure modes.

Tier 0: Rules Only

What it is: Keyword matching, regex patterns, decision trees, lookup tables. No machine learning, no LLM calls.

When to use it: The task has clear patterns and bounded inputs. "If the message contains 'refund,' route to billing." "If the log shows error code X, apply fix Y."

Example from our benchmarks: Customer triage keyword routing achieved 68.6% accuracy at $0 per request. It handled more than two-thirds of inbound messages without touching an LLM.

Trade-offs: Zero marginal cost, deterministic behavior. But brittle to edge cases, and maintaining a regex file with 400+ patterns becomes its own form of technical debt—the cost shifts from GPU to engineering hours. Tier 0 isn't free; it's differently expensive.
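To make Tier 0 concrete, here is a minimal sketch of keyword routing. The rule set and queue names are hypothetical and are not the patterns used in our benchmark; the point is only the shape: ordered rules, first match wins, and an explicit "no match" result that a higher tier can pick up.

```python
import re
from typing import Optional

# Hypothetical routing rules: (compiled pattern, destination queue).
# First match wins, so order the rules from most to least specific.
RULES = [
    (re.compile(r"\b(refund|charge|invoice)\b", re.IGNORECASE), "billing"),
    (re.compile(r"\b(track|shipping|delivery)\b", re.IGNORECASE), "shipping"),
    (re.compile(r"\b(password|login)\b", re.IGNORECASE), "accounts"),
]


def route_by_keyword(message: str) -> Optional[str]:
    """Return a queue name, or None when no rule matches."""
    for pattern, queue in RULES:
        if pattern.search(message):
            return queue
    return None  # Unmatched messages can fall through to a higher tier.
```

Returning `None` instead of a default queue keeps the rule set honest: the share of `None` results is the share of traffic that rules cannot handle.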

Tier 1: Single LLM Call

What it is: Classification, extraction, summarization, or generation handled by one prompt to one model. No tool calling, no multi-turn reasoning.

When to use it: The task requires language understanding but has a clear input-output mapping. Sentiment classification. Entity extraction. Text summarization.

Example from our benchmarks: Native LLM classification for customer triage reached 87.6% accuracy at $0.000074 per request: 19 percentage points above keyword matching for about 7 cents per thousand requests.

Trade-offs: Handles ambiguity that rules cannot. But accuracy depends heavily on prompt engineering, and costs scale linearly with volume.
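A Tier 1 classifier can be sketched as one prompt in, one label out. The example below assumes a `complete` callable that sends a prompt to whichever model you use and returns its text; that callable, the prompt wording, and the category list are illustrative assumptions, not our benchmark code.

```python
from typing import Callable

# Hypothetical label set for a triage task.
CATEGORIES = ("orders", "billing", "accounts", "shipping")


def classify(message: str, complete: Callable[[str], str]) -> str:
    """Classify a message with a single model call and no tools."""
    prompt = (
        "Classify the customer message into exactly one category: "
        + ", ".join(CATEGORIES)
        + ".\nReply with the category name only.\n\nMessage: "
        + message
    )
    answer = complete(prompt).strip().lower()
    # Anything outside the label set is treated as a failed classification
    # rather than being guessed at.
    return answer if answer in CATEGORIES else "unknown"
```

Injecting `complete` keeps the function testable with a stub and makes it easy to swap models when comparing cost and accuracy.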

Tier 2: LLM + Tools

What it is: An LLM that can invoke external functions—database lookups, API calls, retrieval from document stores. Single-turn or bounded multi-turn interaction.

When to use it: The task requires combining reasoning with external information or actions. "Look up the customer's order status and explain it." "Search our knowledge base and answer this question."

Example from our benchmarks: Customer support agents with tool calling (order lookup, account status, escalation) achieved Tool F1 scores from 0.34 to 0.75 depending on framework implementation, with most implementations between 0.63 and 0.75. Tool F1 measures how accurately the agent selected the correct tool and passed valid arguments: it is a precision/recall metric for action selection, not output quality.
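One plausible way to compute a Tool F1 score is sketched below. It assumes a tool call counts as correct only when both the tool name and all arguments match exactly, and that argument values are hashable (strings, numbers). The exact matching rules in our benchmark may differ, so treat this as an illustration of the metric rather than its definition.

```python
from collections import Counter
from typing import Dict, List, Tuple

ToolCall = Tuple[str, Dict[str, object]]  # (tool name, arguments)


def _key(call: ToolCall):
    """Turn a call into a hashable key; argument order does not matter."""
    name, args = call
    return (name, tuple(sorted(args.items())))


def tool_f1(predicted: List[ToolCall], expected: List[ToolCall]) -> float:
    """F1 over tool calls, treating each call as a multiset element."""
    pred = Counter(_key(c) for c in predicted)
    gold = Counter(_key(c) for c in expected)
    true_pos = sum((pred & gold).values())  # calls present in both
    if true_pos == 0:
        return 0.0
    precision = true_pos / sum(pred.values())
    recall = true_pos / sum(gold.values())
    return 2 * precision * recall / (precision + recall)
```

With one correct call out of two predicted and two expected, precision and recall are both 0.5, so the score is 0.5.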

Trade-offs: Extends LLM capabilities to real-world actions. But introduces reliability risks at each tool call, and debugging requires understanding the full interaction trace.

Tier 3: Multi-Step Reasoning

What it is: Systems that plan, execute, observe, and adapt across multiple steps. ReAct patterns, agent loops, multi-agent coordination.

When to use it: The task genuinely requires autonomous problem-solving with unpredictable execution paths. Complex research tasks. Multi-system orchestration. Scenarios where the "right" action depends on runtime observations.

Example from our benchmarks: DevOps remediation agents diagnosing HDFS cluster failures and executing bash commands to fix them. The task requires interpreting logs, forming hypotheses, testing solutions, and adapting.

Trade-offs: Handles genuine complexity that lower tiers cannot. But reliability compounds negatively (each step can fail), costs become unpredictable, and security concerns intensify when agents execute actions.

Google's L0-L4 taxonomy (2025) validates this tiered thinking, mapping roughly to our framework: their L0 (core reasoning) aligns with our Tier 1, L1-L2 (connected/strategic problem-solvers) with our Tier 2, and L3 (collaborative multi-agent) with our Tier 3. The convergence suggests this isn't arbitrary—complexity should be progressive.

Where Human-in-the-Loop Fits

The ZenML analysis found 40% of successful deployments used human-in-the-loop patterns. This isn't a separate tier—it's an escalation strategy applicable across tiers:

- Tier 1 + HITL: LLM classifies, but low-confidence predictions route to human review

- Tier 2 + HITL: Agent proposes actions, human approves before execution

- Tier 3 + HITL: Multi-step reasoning, but human validates at key decision points

Human-in-the-loop trades latency for reliability. For high-stakes decisions (refunds over $500, security exceptions, compliance-sensitive actions), the pattern lets you deploy AI earlier while building confidence in automated handling.
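The escalation rule can be as small as a single predicate. In this sketch the $500 limit comes from the example above, while the 0.8 confidence threshold and the function name are assumptions chosen for illustration.

```python
def needs_human(
    confidence: float,
    amount_usd: float = 0.0,
    *,
    min_confidence: float = 0.8,     # assumed threshold, tune on your own data
    max_auto_amount: float = 500.0,  # refunds above this always get reviewed
) -> bool:
    """Return True when a prediction or proposed action should go to a person."""
    return confidence < min_confidence or amount_usd > max_auto_amount
```

Logging every case where `needs_human` returns True also yields a labeled set of hard examples, which is useful when deciding whether the threshold can be relaxed.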

Evidence: Customer Triage Case Study

To test these tiers in practice, we built a customer triage system using the Bitext 26K dataset—26,000 real customer support messages requiring routing to the correct department (orders, billing, accounts, shipping, etc.).

Our evaluation used 250 samples—not 50. This distinction matters. Our pilot evaluation with 50 samples showed 94% accuracy. The full 250-sample evaluation revealed 84-88% accuracy depending on implementation. Small pilots lie to you. The variance across samples matters more than most teams realize.

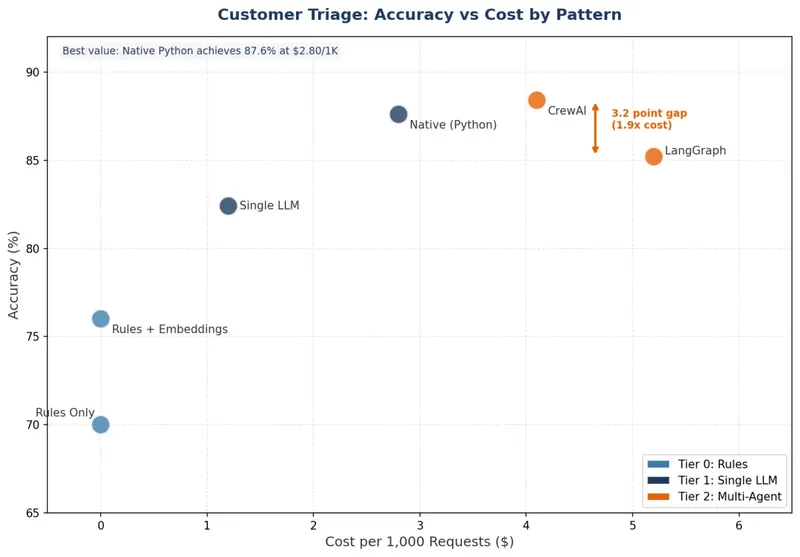

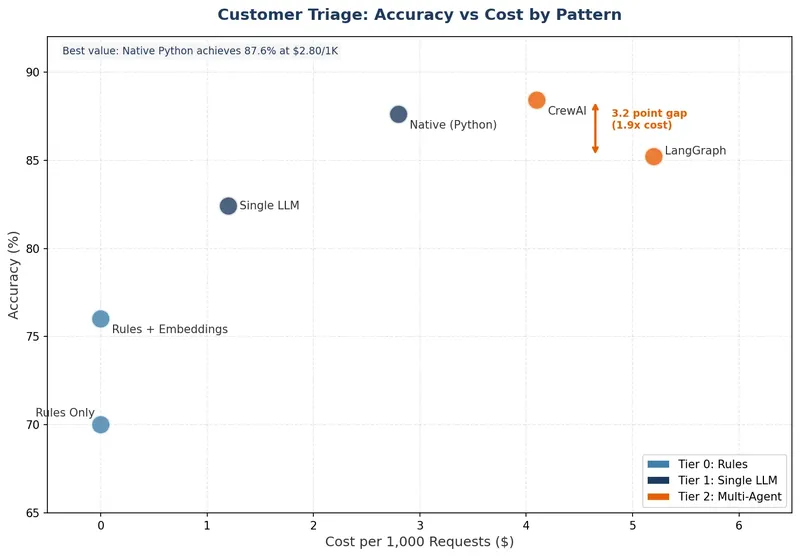

The Results

| Implementation | Accuracy | Cost per Request | Latency (P95) | Notes |
|---|---|---|---|---|
| Tier 0: Keywords | 68.6% | $0 | <5ms | Pattern matching on message content |
| Tier 1: Native LLM | 87.6% | $0.000074 | ~800ms | Single GPT-4o-mini call |
| Tier 1: DSPy | 84.8% | $0.000081 | ~900ms | Prompt-optimized |
| Tier 1: PydanticAI | 86.4% | $0.000079 | ~850ms | Structured output |
| Tier 2: LangGraph | 84.4% | $0.000089 | ~1.2s | State machine orchestration |
| Tier 2: CrewAI | 88.4% | $0.000171 | ~2.5s | Multi-agent coordination |

What This Shows

The 69% rule: Keyword routing correctly handled nearly 70% of requests without any AI. For high-volume systems, this tier should be the first line of defense. Route the obvious cases deterministically and reserve AI for the ambiguous remainder. At 100,000 requests per month, handling 69,000 with $0 AI cost fundamentally changes the unit economics.

The narrow 4-point spread: Across the five LLM-based implementations (native code plus DSPy, PydanticAI, LangGraph, and CrewAI), accuracy ranged from 84.4% to 88.4%. Four percentage points. Framework choice mattered less than we expected.

The 2.3x cost multiplier: CrewAI achieved the highest accuracy (88.4%) but at 2.3x the cost of native implementations. Whether that trade-off makes sense depends entirely on the business context. For a customer support operation where misrouting costs $50 in agent time, the extra 0.8 percentage points might justify the cost. For a low-stakes notification system, it won't.

The hybrid approach: In production, we'd recommend a tiered architecture: Tier 0 keyword routing handles the 69% of clear-cut cases. Tier 1 LLM classification handles the remaining 31%. Tier 2 agent escalation handles the true edge cases within that remainder (perhaps 5-10% of total volume). This cascading approach optimizes for both cost and accuracy: you're not paying for AI reasoning on messages where "refund" clearly maps to the billing queue.
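The cascade described above can be sketched as a list of tiers tried in order, where each tier either returns a label or declines. The two tier functions here are stand-ins (the second would wrap a real model call in practice), and all names are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

Tier = Callable[[str], Optional[str]]  # returns a label, or None to decline


def run_cascade(
    message: str, tiers: List[Tier], fallback: str = "human_review"
) -> Tuple[int, str]:
    """Try each tier in order; return (index of the tier that answered, label)."""
    for index, tier in enumerate(tiers):
        label = tier(message)
        if label is not None:
            return index, label
    return len(tiers), fallback  # nothing answered, so escalate


def keyword_tier(message: str) -> Optional[str]:
    return "billing" if "refund" in message.lower() else None


def stub_llm_tier(message: str) -> Optional[str]:
    # Placeholder for a single-call LLM classifier.
    return "shipping" if "package" in message.lower() else None
```

Returning the tier index alongside the label makes it straightforward to measure how much traffic each tier actually absorbs.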

The sample size lesson: The gap between our 50-sample pilot (94% accuracy) and 250-sample evaluation (84-88% accuracy) deserves emphasis. Small pilots systematically overestimate production performance. The edge cases that break your system appear at the margins of your distribution—you won't see them in 50 samples. Any evaluation with statistical claims should include confidence intervals and effect sizes, not just point estimates.
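A confidence interval makes the pilot-versus-full gap less surprising. The sketch below uses the Wilson score interval for a binomial proportion at roughly 95% confidence (z = 1.96); choosing Wilson is our assumption here, and other interval methods would serve equally well.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a proportion (default is about 95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


pilot = wilson_interval(47, 50)    # 94% on 50 samples
full = wilson_interval(219, 250)   # 87.6% on 250 samples
```

For the 50-sample pilot the interval runs from roughly 84% to 98%, so the pilot never ruled out the 84-88% range seen later. On 250 samples it narrows to roughly 83% to 91%.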

Evidence: Legal Extraction Case Study

Legal document extraction presents a different challenge: understanding contract language and extracting specific clauses (governing law, liability caps, indemnification terms). We tested this using the CUAD v1 dataset—30 real contracts with 326 ground-truth annotations.

The Surprise: Bigger Wasn't Better

| Implementation | Recall | Cost | Notes |
|---|---|---|---|
| Tier 0: Regex | 8% | $0 | Pattern matching |
| Tier 1: Native GPT-4o | 61% | $0.17 | Single extraction call |
| Tier 1: DSPy GPT-4o | 67% | $2.00 | Prompt-optimized |
| Tier 1: DSPy GPT-4o-mini | 67%* | $0.20 | Over-extraction strategy |

*The model identified valid clauses that human annotators missed. When we re-adjudicated the ground truth against these discovered clauses, the smaller model significantly outperformed the larger one. The raw recall figure against the original annotations came out at 101%, which is impossible for a true recall and signals annotation gaps rather than model magic.

The counterintuitive finding: We paid 10x more for GPT-4o compared to GPT-4o-mini and got worse results. Why? GPT-4o appeared to "overthink" the task—being too conservative on edge cases where the smaller model confidently extracted.

This pattern emerges repeatedly in enterprise deployments. Larger models aren't universally better. Task-specific evaluation matters more than benchmark rankings.

The Hallucination Risk

Our CrewAI implementation surfaced a different concern. After processing 3 of 30 documents, the multi-agent system began generating text that didn't exist in the source contracts:

CrewAI generated: "The customer shall not assign without consent..." Actual contract text: "Subject to Section 20.2, neither Party shall..."

We caught this through programmatic faithfulness verification—comparing extracted text against source documents. Without that check, fabricated contract language would have entered downstream systems.
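A basic version of such a check is a verbatim-substring test after normalizing whitespace and case. This is a simplified sketch, not our production verifier: it will reject legitimate paraphrases and it does not handle OCR noise, so a real pipeline might add fuzzy matching on top.

```python
import re


def _normalize(text: str) -> str:
    """Collapse runs of whitespace and lowercase, so line breaks don't matter."""
    return re.sub(r"\s+", " ", text).strip().lower()


def is_faithful(extracted: str, source: str) -> bool:
    """True only if the extracted clause appears verbatim in the source."""
    needle = _normalize(extracted)
    if not needle:
        return False  # an empty extraction is never evidence of anything
    return needle in _normalize(source)
```

Run against the example above, the generated sentence fails the check because no span of the source contains it.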

The lesson: multi-agent systems add coordination overhead that can introduce failure modes absent in simpler architectures. More agents means more opportunities for hallucination to propagate.

Evidence: Framework Comparison

The agent framework landscape is crowded: LangGraph, CrewAI, AutoGen, DSPy, PydanticAI, Agno, and native implementations. Which matters most?

We tested seven implementations on the same customer support task—multi-turn conversations with tool calling (order lookup, account status, refunds, escalation). Dataset: BrownBox E-Commerce, 50 evaluated conversations.

Framework Results

| Framework | Tool F1 | Escalation Accuracy | Notes |
|---|---|---|---|
| LangGraph | 0.75 | 92% | Best overall, state machine |
| Native | 0.69 | 75% | Hand-coded ReAct loop |
| CrewAI | 0.65 | 75% | Required bug fix |
| AutoGen | 0.63 | 89% | Required prompt fix |
| Agno | 0.34 | 78% | Fast instantiation, poor task perf |

The Real Finding: Bug Fixes > Framework Choice

CrewAI's initial implementation scored poorly. After fixing a routing bug, performance improved 45%. AutoGen's initial implementation struggled with tool selection. After prompt engineering, performance improved 66%.

Excluding Agno, the spread between frameworks (0.63 to 0.75 Tool F1, about 19% in relative terms) was smaller than the improvement from implementation fixes (45% and 66%). In other words: pick any reasonable framework, then invest heavily in implementation quality.

Framework overhead: We measured token consumption for equivalent tasks:

- Native: 28,529 tokens

- LangGraph: 54,060 tokens (about 89% more than native; roughly 47% of its total is overhead)

At scale, this overhead compounds. Framework abstractions trade development convenience for runtime efficiency.
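The two token counts above can be compared in two ways, and it is worth being explicit about which one a percentage refers to. A small sketch (the function name is ours):

```python
from typing import Tuple


def token_overhead(native_tokens: int, framework_tokens: int) -> Tuple[float, float]:
    """Return (extra tokens relative to native, extra tokens as share of framework total)."""
    extra = framework_tokens - native_tokens
    return extra / native_tokens, extra / framework_tokens


relative_to_native, share_of_total = token_overhead(28_529, 54_060)
```

Here `relative_to_native` is about 0.89 (the framework uses 89% more tokens than native) and `share_of_total` is about 0.47 (47% of the framework's tokens are overhead).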

The Agno Caveat

Agno (formerly Phidata) claims 529x faster instantiation than LangGraph. Our measurements showed 242x faster instantiation and 35x lower memory usage—impressive numbers. But Agno's Tool F1 of 0.34 was the worst in our evaluation.

Speed metrics and task performance are orthogonal. Instantiation time doesn't matter if the agent can't complete the task.

Decision Framework: When to Use What

Based on our benchmarks and production patterns observed across enterprise deployments, we propose these selection criteria:

Choose Tier 0 (Rules) When:

- Task has clear patterns with bounded variation

- Deterministic behavior is required (compliance, audit trails)

- Cost at scale is primary concern

- You need near-instant responses (single-digit milliseconds)

Real-world signal: If you can write 10-20 rules that cover 60%+ of cases, start here.

Choose Tier 1 (Single LLM) When:

- Task requires language understanding beyond pattern matching

- Input-output mapping is relatively clear

- You need to handle ambiguity and paraphrasing

- Response latency of 1-3 seconds is acceptable

Real-world signal: If you can describe the task to a human in one sentence, Tier 1 probably suffices.

Choose Tier 2 (LLM + Tools) When:

- Task requires external data lookup or actions

- Multi-turn interaction with bounded scope

- Acceptable to have 85-90% reliability per tool call

- You can constrain the tool set to 5-10 well-defined functions

Real-world signal: If the task would take a human 5 minutes with access to specific systems, Tier 2 fits.

Choose Tier 3 (Multi-Step Reasoning) When:

- Task genuinely requires planning and adaptation

- Execution path cannot be predetermined

- Failure modes are recoverable (internal tools, non-financial actions)

- You have observability infrastructure for debugging

Real-world signal: If you can't write a flowchart that covers 80% of cases, you might need Tier 3.
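The criteria above can be compressed into a first-pass heuristic. The sketch below is deliberately coarse: it reduces each tier to one question, the field names are hypothetical, and it ignores factors such as compliance, latency, and observability that the lists above also weigh. It is a conversation starter, not a decision procedure.

```python
from dataclasses import dataclass


@dataclass
class Task:
    needs_language_understanding: bool  # beyond what pattern matching can do
    needs_external_tools: bool          # data lookups or actions in other systems
    path_known_in_advance: bool         # could you draw the flowchart?


def recommend_tier(task: Task) -> int:
    """Return the lowest tier (0-3) that plausibly fits the task."""
    if not task.path_known_in_advance:
        return 3
    if task.needs_external_tools:
        return 2
    if task.needs_language_understanding:
        return 1
    return 0
```

The checks run from most to least demanding so that a task is only assigned a higher tier when a specific requirement forces it there.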

The Reliability Math

One more consideration: reliability compounds across steps. If each tool call succeeds 90% of the time:

- 1 call: 90% success

- 2 calls: 81% success

- 3 calls: 73% success

- 5 calls: 59% success

This is the "reliability cliff" that kills agent deployments. A 90% per-step accuracy sounds acceptable until you realize a 5-step workflow fails 41% of the time. The math favors simpler architectures.
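The arithmetic behind these numbers assumes each step succeeds independently with the same probability, which is a simplification. Under that assumption, a short sketch reproduces the figures and answers the inverse question: how many steps can a workflow afford for a given target success rate?

```python
def chain_success(per_step: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent steps."""
    return per_step ** steps


def max_steps(per_step: float, target: float) -> int:
    """Largest number of steps whose end-to-end success stays at or above target."""
    if not 0 < per_step < 1 or not 0 < target <= 1:
        raise ValueError("per_step must be in (0, 1) and target in (0, 1]")
    steps = 0
    while per_step ** (steps + 1) >= target:
        steps += 1
    return steps
```

At 90% per step, an 80% end-to-end target leaves room for only two steps; a third drops the workflow to about 73%.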

The Default Recommendation

Start at Tier 0. Measure what percentage of cases it handles. Only step up when you have evidence that the simpler approach fails.

Alex Strick van Linschoten summarized this well: "If you can get away with not having something which is fully or semi-autonomous, then you really should and it'll be much easier to debug and evaluate and improve" (Vanishing Gradients, 2025).

The ZenML analysis of 240 production deployments found that 40% of successful implementations used human-in-the-loop patterns (ZenML, 2025). These aren't failures—they're architectures that recognize where human judgment adds value. The best agents know when to ask for help.

Production Patterns: What Survives Deployment

Once you've selected the right tier, production deployment introduces its own challenges. Google's "Prototype to Production" paper estimates that "roughly 80% of the effort is spent not on the agent's core intelligence, but on the infrastructure, security, and validation needed to make it reliable and safe" (Google, 2025).

Three patterns emerged from our implementation work:

The Warden Pattern (Security)

When we deployed our DevOps remediation agent—an LLM with bash execution privileges—something instructive happened. Within 5 seconds, the AI attempted `rm -rf /`. Not maliciously. It was troubleshooting a disk space issue and decided to "clean up."

The Warden pattern addresses this: a proxy layer that validates all tool calls before execution. Our implementation:

- Blocked 25/25 prompt injection attacks (100%)

- Allowed 141/141 legitimate operations (0% false positives)

- Used an allowlist (permit only known-safe operations) rather than a blocklist (block known attacks)
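To illustrate the allowlist idea, here is a minimal sketch of a command validator. It is not our Warden implementation: the permitted command set and the metacharacter list are assumptions, and a real proxy would also validate arguments and paths per command.

```python
import shlex

# Hypothetical set of read-only diagnostics the agent may run.
ALLOWED_COMMANDS = {"df", "du", "ls", "cat", "tail", "grep"}

# Characters that enable chaining, piping, redirection, or substitution.
SHELL_METACHARACTERS = set(";|&<>`$")


def is_allowed(command_line: str) -> bool:
    """Permit a command only if it is on the allowlist and has no shell tricks."""
    if any(ch in SHELL_METACHARACTERS for ch in command_line):
        return False
    try:
        tokens = shlex.split(command_line)
    except ValueError:  # e.g. unbalanced quotes
        return False
    return bool(tokens) and tokens[0] in ALLOWED_COMMANDS
```

Anything not explicitly permitted is rejected, so `rm -rf /` fails without needing a rule written for it. That is the practical difference from a blocklist.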

The Automation-First Pattern (Cost)

Our DevOps evaluation revealed another insight: Ansible pattern matching handled 76.7% of incident scenarios with zero AI involvement. The remaining 23.3% required genuine reasoning.

At scale (1M incidents/month):

- AI-only approach: ~$105K/year

- Hybrid approach (77% automation): ~$24.6K/year

- Annual savings: $80,400

The pattern: build rules for the obvious cases, reserve AI for the genuinely ambiguous remainder.

The Evaluation-Gated Deployment Pattern (Quality)

Production agent deployments require continuous evaluation, not one-time testing. Our pipeline:

- Pre-merge: Run evaluation suite on every PR

- Staging: Full integration tests against golden dataset

- Production: Canary deployment with real-time monitoring

- Evolution: Production failures become tomorrow's test cases

As Google notes: "No agent proceeds to production without a quality check... evaluation as a quality gate" (Google, 2025).
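A quality gate of this kind can be reduced to comparing measured metrics against minimum thresholds and failing the pipeline on any miss. The metric names and threshold values below are hypothetical; pick them from your own baseline runs.

```python
from typing import Dict, List


def gate_failures(metrics: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Return a description of every threshold that was missed (empty means pass)."""
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: metric missing")
        elif value < minimum:
            failures.append(f"{name}: {value} is below {minimum}")
    return failures


# Hypothetical thresholds for a pre-merge check.
THRESHOLDS = {"accuracy": 0.85, "tool_f1": 0.70}
```

In CI, a non-empty result would translate to a non-zero exit code. Treating a missing metric as a failure prevents a broken evaluation run from passing silently.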

These patterns are the subject of dedicated newsletters in this series.

Conclusion: The Real Expertise

The agent hype cycle has created a curious inversion. Teams debate framework selection while ignoring tier selection. They optimize prompt engineering while skipping the question of whether an LLM is even needed.

Our benchmarks suggest a different approach:

Start boring. Keyword routing, regex patterns, lookup tables. Measure what percentage of cases they handle. Our customer triage baseline handled 69% at zero cost.

Step up deliberately. When simple methods fail, add complexity one tier at a time. A single LLM call often suffices. Tool calling adds capability but compounds failure modes. Full agent architectures should be reserved for tasks that genuinely require multi-step reasoning.

Invest in implementation quality. Bug fixes improved our CrewAI results 45%. Prompt engineering improved AutoGen 66%. Framework choice mattered less than execution quality.

Build for observability. You cannot debug what you cannot see. Multi-step agent architectures require distributed tracing, comprehensive logging, and continuous evaluation infrastructure.

The agent complexity spectrum isn't a framework for building sophisticated AI. It's a framework for matching solutions to problems—and recognizing that most problems don't need sophisticated AI.

Knowing when not to use agents. That's the real expertise.

References

- Google. (2025). "Introduction to Agents." Google AI Whitepaper Series. Available at: https://www.kaggle.com/whitepaper-agents

- Google. (2025). "Prototype to Production." Google AI Whitepaper Series.

- Bowne-Anderson, H. & Strick van Linschoten, A. (2025). "Practical Lessons from 750+ Real-World LLM and Agent Deployments." Vanishing Gradients Podcast.

- ZenML. (2025). LLMOps Database Analysis. Internal analysis of 240 production deployments.

- Applied AI. (2025). Enterprise Agents Benchmark. Available at: https://github.com/applied-artificial-intelligence/enterprise-agents

This article is part of the Applied AI technical content series on enterprise AI implementation patterns.